Calling teachers and students once a week almost doubled the impact on reading and maths test scores of an adult education programme in Niger

Almost 1 billion adults worldwide are unable to read or write in any language, with an estimated cost of US$5 billion annually to low-income countries (Cree et al. 2012, UNESCO 2020). While policymakers and academics alike have focused primarily on primary and secondary education programmes, public sector spending on adult education programmes remains significant. In India alone, the government has spent more than US$1.4 billion on the national Saakshar Bharat adult literacy campaign since 2009,¹ with similar levels of spending on adult literacy programmes in Brazil. The widespread growth of these programmes in recent decades has generated hope that they can improve learning outcomes and deliver more immediate private and social returns amongst adult learners than investments in primary education (UNESCO 2009, World Bank 2010).

Despite this potential, there is a relative paucity of research on adult learning. Existing evidence suggests that adult literacy programmes are often characterised by low and volatile attendance, high drop-out rates, limited skill attainment, and rapid skill depreciation (Romain and Armstrong 1987, Abadzi 1994, Rogers 1999, Oxenham et al. 2002, Royer et al. 2004, Ortega and Rodríguez 2008, Aker et al. 2012). Potential reasons include higher opportunity costs for adults, the irrelevance of such skills in daily life, and poorly adapted pedagogical approaches. Another key challenge is teacher absenteeism. In earlier work in Niger, teachers taught only approximately one-third of their courses (Aker et al. 2012). And Niger is not alone. According to a 2013 report by Transparency International, primary school teacher absenteeism ranged between 11% and 30% (Transparency International 2013, Mbiti 2016).

Does monitoring work?

In an effort to address absenteeism, a number of governments and non-governmental organisations (NGOs) have attempted to strengthen the monitoring of teachers. Teacher monitoring, especially coupled with financial incentives, has been found to reduce teacher absenteeism in primary schools (Duflo et al. 2012, Cueto et al. 2008, Muralidharan et al. 2017, Cilliers et al. 2018). Yet improving teacher accountability continues to be a significant challenge for two reasons. First, in countries with limited infrastructure and weak institutions, the costs of monitoring are particularly high. And second, some evidence suggests that material and financial incentives can actually crowd out intrinsic motivation, potentially leading to negative or no effects on teachers’ efforts.

Using technology to teach and monitor

Motivated by these questions and hypotheses, our research (Aker and Ksoll 2019) studies how simple mobile phone technology, such as phone calls, could affect teachers’ behaviour and improve learning in the context of an adult education programme in Niger. Building on earlier work on using mobile phones as a pedagogical tool, we worked with an NGO, the Ministry of Education, and a research partner to implement this mobile phone intervention.

Across 134 villages in two regions in Niger, we randomly assigned villages to one of two interventions or a control:

- The control group did not participate in any adult education intervention over a two-year period.

- The first treatment group participated in an adult education programme, which involved literacy and numeracy training for five months per year (between January and June) over a two-year period. In-person monitoring of teachers occurred, although sporadically.

- A second treatment group received the same programme as the first, but the teacher, two randomly chosen adult education students, and the village chief received weekly phone calls for approximately six weeks, asking about the teachers’ presence in class. No financial incentives or mobile phones were provided to any of the respondents.

To measure the impact of these interventions, we assessed a range of outcomes, including students’ learning, attendance, and self-esteem, as well as teacher absenteeism, motivation, and preparedness for class. We collected this information before and after the intervention for both years of the programme.

Calling works

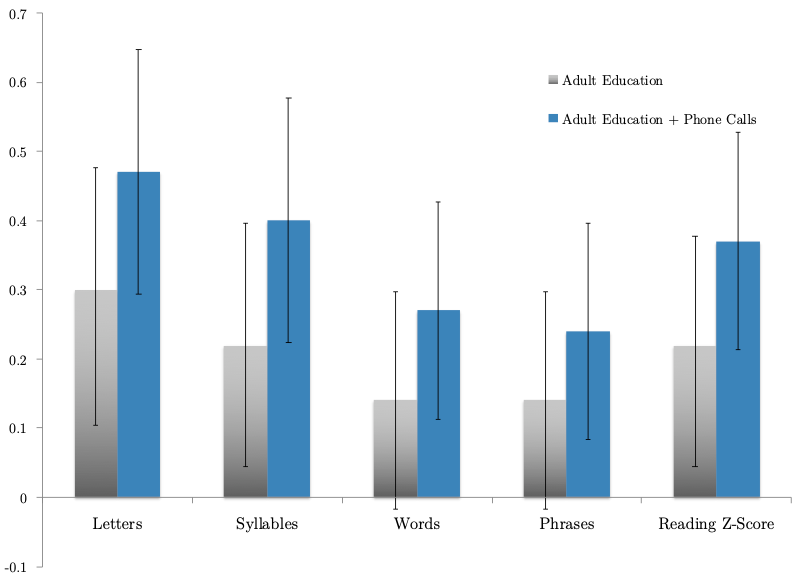

Our study found that simply calling teachers, students, and the village chief once a week over six weeks per year significantly increased adult students’ reading and maths test scores as compared with those of students in the standard adult education programme. While students in the adult education programme alone improved their reading and maths test scores by 0.19-0.22 standard deviations as compared with the control group, the calling intervention increased students’ test scores by an additional 0.12-0.19 standard deviations, almost doubling the programme’s impact on test scores (Figure 1). These effects were stronger in the first year of the programme.

Figure 1 Reading z-scores across years

Notes: This graph shows the reading z-scores for different reading tasks (reading letters, syllables, words, phrases and all) amongst students in different intervention groups, plus the control. The data were collected at the end of the first and second years of the programme. The mean of the control group is zero, and hence not shown on the graph.
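To make the "almost doubling" concrete, the short sketch below expresses the additional effect of the calls as a share of the programme-only effect, using only the ranges reported above. The variable names are illustrative, and pairing the endpoints of the two ranges is an assumption: the text does not say which reading or maths estimate corresponds to which, so the output is a bound rather than a reported result.

```python
# Effect sizes in standard deviations, as reported in the text.
programme_effect = (0.19, 0.22)  # adult education alone vs. control
extra_from_calls = (0.12, 0.19)  # phone calls vs. adult education alone


def relative_gain(extra: float, base: float) -> float:
    """Additional effect of the calls as a fraction of the programme-only effect."""
    return extra / base


# Assumption: the endpoints can be combined freely, which gives the widest
# possible range. The paper's actual subject-by-subject pairing may differ.
lowest = relative_gain(min(extra_from_calls), max(programme_effect))
highest = relative_gain(max(extra_from_calls), min(programme_effect))

print(f"Calls add between {lowest:.0%} and {highest:.0%} of the programme-only effect")
```

Under these assumptions the calls add somewhere between roughly half and the whole of the programme-only effect; the upper end of that range is what a doubling corresponds to.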

What explains these results? Qualitative work suggests that teachers were split on whether the calls were primarily a tool for monitoring or for providing support and encouragement. While we did not find any impact of the phone calls on teacher attendance (in part due to spotty attendance records), there was some evidence that teachers were more prepared for class, as measured by their performance on the Ministry’s preparation exam after the first year. Thus, concerns about crowding out motivation seem to be unfounded.

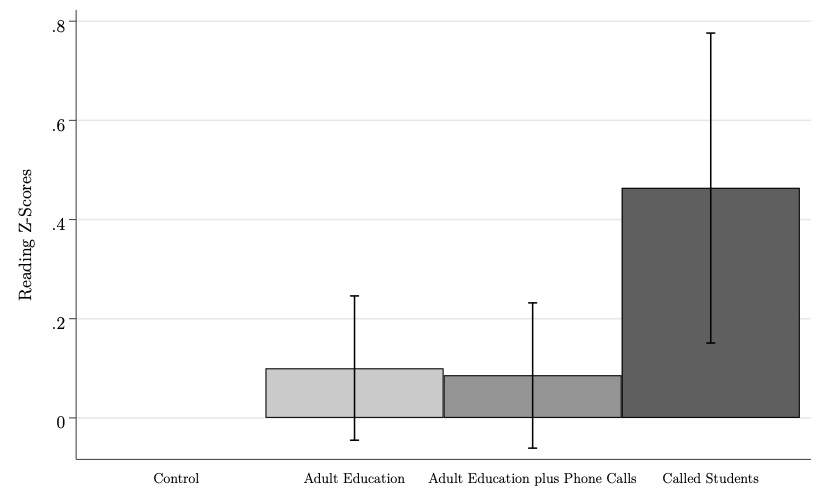

On the student side, students in called villages were less likely to drop out of the course than those in non-called villages. In terms of learning, the effects on called students were between two and four times larger than those on non-called students during the intervention. These learning gains persisted amongst called students six months after the programme (Figure 2), while the learning gains amongst non-called students in villages that received the phone calls depreciated over time. This could be explained by the level of learning achieved during the intervention: neither intervention was successful in raising students’ scores to the threshold reading level required for automaticity, a key indicator for sustained learning. As a result, further research is required to understand the mechanisms behind the impacts on called students.

Figure 2 Reading z-scores six months after the end of the programme

Notes: This graph shows the composite reading z-scores amongst students in the different intervention groups six months after the end of the programme. The “Adult Education” group shows the mean reading z-scores amongst all students in adult education (only) villages. The “Adult Education plus Phone Calls” group shows the mean reading z-scores amongst non-called students in the villages that were assigned to the phone calls. The mean of the control group is zero, and hence not shown on the graph.

Policy implications

Our results have a number of implications for understanding whether and how simple technology can influence teachers’ and students’ behaviours, as well as learning outcomes, in a resource-constrained setting with weak institutions.

Our evidence suggests that merely calling educational stakeholders about teacher attendance can change behaviour and learning outcomes. However, the findings also highlight the difficulty in assessing the mechanisms behind these effects. The fact that we do not observe a decline in teacher absenteeism may be due in part to the challenge of accessing high-quality absenteeism data without a separate intervention. Yet the improvement in teachers’ preparedness suggests that phone calls alone can motivate students and teachers.

Given the magnitude of the impacts across years, and the sustained results for called students, the intervention appears highly cost-effective. Compared with the standard adult education programme in Niger, which cost US$20 per student, the mobile phone intervention cost an additional US$3 per village. Given that the effects persisted amongst called students, this would amount to an additional US$0.75 per called student.
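The per-person figure can be checked with simple arithmetic. The sketch below is a reader's reconstruction, not the authors' costing: it assumes the US$3 per village is spread over the four weekly call recipients described in the study design (the teacher, two students, and the village chief). Under that assumption the result matches the US$0.75 quoted above; dividing by the two called students alone would instead give US$1.50.

```python
# Figures reported in the text (US dollars).
BASE_COST_PER_STUDENT = 20.0   # standard adult education programme
CALL_COST_PER_VILLAGE = 3.0    # additional cost of the phone-call intervention

# Call recipients per village, as described in the study design.
recipients = {"teacher": 1, "students": 2, "village chief": 1}

# Assumption: the village-level cost is divided evenly across all recipients.
n_recipients = sum(recipients.values())
cost_per_recipient = CALL_COST_PER_VILLAGE / n_recipients

# Alternative reading: divide only across the called students.
cost_per_called_student = CALL_COST_PER_VILLAGE / recipients["students"]

# Added cost relative to the base programme cost per student.
share_of_base_cost = cost_per_recipient / BASE_COST_PER_STUDENT

print(f"Per call recipient:  ${cost_per_recipient:.2f}")
print(f"Per called student:  ${cost_per_called_student:.2f}")
print(f"Share of base cost:  {share_of_base_cost:.2%}")
```

Either way, the added cost is small relative to the US$20 base cost per student.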

At the same time, the next generation of adult literacy programmes would benefit from further experimentation. Specifically, we believe it is worth experimenting with calling a larger number of students within a class, rotating calls to different students, or linking the scripts and calls more explicitly to performance evaluations or pedagogical support.

References

Abadzi, H (1994), "What We Know About Acquisition of Adult Literacy: Is There Hope?," World Bank Discussion Paper ix.

Aker, J C, C Ksoll and T J Lybbert (2012), “Can Mobile Phones Improve Learning? Evidence from a Field Experiment in Niger”, American Economic Journal: Applied Economics 4(4): 94-120.

Aker, J C and C Ksoll (2019), “Call me educated: Evidence from a mobile phone experiment in Niger”, Economics of Education Review 72: 239-257.

Cilliers, J, I Kasirye, C Leaver, P Serneels, and A Zeitlin (2018), “Pay for locally monitored performance? A welfare analysis for teacher attendance in Ugandan primary schools.”

Cueto, S, M Torero, J León, and J Deustua (2008), “Asistencia docente y rendimiento escolar: el caso del programa META” (“Teacher support and school accountability: The META program”), GRADE Working Paper No. 53.

Cree, A, A Kay and J Steward (2012), The Economic and Social Cost of Illiteracy: A Snapshot of Illiteracy in a Global Context, World Literacy Foundation.

Duflo, E, R Hanna and S Ryan (2012), “Incentives Work: Getting Teachers to Come to School”, American Economic Review 102(4): 1241-1278.

Mbiti, I (2016), “The Need for Accountability in Education in Developing Countries”, Journal of Economic Perspectives 30(3): 109-132.

Muralidharan, K, J Das, A Holla and A Mohpal (2017), “The Fiscal Cost of Weak Governance: Evidence from Teacher Absence in India”, Journal of Public Economics 145: 116-135.

Ortega, D and F Rodríguez (2008), "Freed from Illiteracy? A Closer Look at Venezuela’s Mision Robinson Literacy Campaign", Economic Development and Cultural Change 57: 1-30.

Oxenham, J, A H Diallo, A R Katahoire, A Petkova-Mwangi and O Sall (2002), Skills and Literacy Training for Better Livelihoods: A Review of Approaches and Experiences, World Bank.

Rogers, A (1999), “Improving the quality of adult literacy programmes in developing countries: The `real literacies' approach”, International Journal of Educational Development 19(3): 219-34.

Romain, R and L Armstrong (1987), Review of World Bank Operations in Nonformal Education and Training, World Bank.

Royer, J M, H Abadzi and J Kinda (2004), “The impact of Phonological-Awareness and Rapid-Reading Training on The Reading Skills of Adolescent and Adult Neo-Literates”, International Review of Education 50(1): 53-71.

Transparency International (2013), The Global Corruption Report: Education, Routledge.

UNESCO (2009), Global Report on Adult Learning and Education.

World Bank (2010) "Adult learning (English)", Communication for Governance and Accountability Program (CommGAP).

Wuepper, D and T J Lybbert (2017), “Perceived Self-Efficacy, Poverty and Economic Development.” Annual Review of Resource Economics 9: 383-404.

Endnotes

1 See http://unionbudget2017.cbgaindia.org/education/saakshar_bharat.html, http://saaksharbharat.nic.in/saaksharbharat/homePage and http://www.unesco.org/uil/litbase/?menu=9&programme=132