Microcredit trials show only moderate variation in effects across different settings, suggesting that the average effects of these loans are small

The idea that giving small loans to poor households would help them escape poverty was once considered so compelling that it won Muhammad Yunus the Nobel Peace Prize. The global microloan portfolio is now worth over $102 billion and is growing yearly (Microfinance Barometer 2017). Yet microcredit now enjoys so little support among academics and policymakers that the Washington Post recently felt the need to assure us that “microcredit isn’t dead” (Gebelhoff 2016). One factor in that dwindling support has been the results of academic studies: seven different randomised controlled trials (RCTs) found little evidence that increasing access to microcredit caused any major change in household business profits, income, or consumption (Angelucci et al. 2015, Attanasio et al. 2015, Augsburg et al. 2015, Banerjee et al. 2015a, Crepon et al. 2015, Karlan and Zinman 2011, Tarozzi et al. 2015).

But, despite widespread agreement that RCTs are a rigorous method for estimating these effects, the research and policy community disagreed on how to interpret these results. Many noted that the estimated effects of microcredit on households seemed to be different in different studies (Pritchett and Sandefur 2015). In that case, what can we really learn from any single RCT, or even a set of RCTs, about what microcredit does in other places? On what basis could the evidence provided by these randomised trials be used to conclude anything about microcredit in general, that we could expect to replicate in another setting?

The ‘external validity’ problem

This question is related to a phenomenon, found in all of social science, known as ‘external validity’ – the extent to which results from studies in one setting are valid for inferences in different settings (Allcott 2015, Pritchett and Sandefur 2015, Vivalt 2016).

This affects what we can really learn from these studies. The degree of variation in effects across settings captures the extent of the problem – large variation in effects means that predictions for new settings may be infeasible or possible only with great uncertainty, while small to moderate variation means the studies may yield generalisable knowledge with less uncertainty. The relevant question is not whether the effects vary across settings but by how much they vary.

Fortunately, the set of studies we already have contains information about this variation across settings. After all, we can see the variation – or lack of it – in the estimated effects. In many cases, using the data from all the studies we have about a similar policy intervention, we can calculate the statistical uncertainty resulting from cross-study variation and thus understand how well the effects predict each other in existing studies. This gives us information about how well we can predict effects in new settings.

Challenges of quantifying uncertainty when effects vary across settings

But there are statistical challenges involved in estimating the differences in effects across settings. Sampling variation within each study is part of the reason for heterogeneity in reported effects across studies, given that researchers randomly sample individuals and then randomly assign those individuals (or villages) to either treatment or control groups. That sampling variation compounds the cross-study variation, so the published estimated effects look more heterogeneous than the underlying effects really are.
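The inflation described above can be seen in a small simulation. This is only a sketch under assumed values: the number of sites, the spread of true effects and the within-study standard error are all hypothetical, and a large number of sites is used purely so that the arithmetic is visible.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is reproducible

N_SITES = 5000     # hypothetical; far more sites than the seven real studies
TRUE_SD = 1.0      # assumed spread of true effects across settings
SAMPLING_SE = 2.0  # assumed within-study standard error

# True effect in each site, then a noisy estimate of it from a finite sample.
true_effects = [random.gauss(0.0, TRUE_SD) for _ in range(N_SITES)]
estimates = [t + random.gauss(0.0, SAMPLING_SE) for t in true_effects]

var_true = statistics.pvariance(true_effects)
var_estimated = statistics.pvariance(estimates)

# Share of the spread in estimates that is sampling noise rather than
# genuine cross-site variation.
share_sampling = 1.0 - var_true / var_estimated

print(f"variance of true effects:      {var_true:.2f}")
print(f"variance of estimated effects: {var_estimated:.2f}")
print(f"share due to sampling noise:   {share_sampling:.2f}")
```

The variance of the estimates should come out close to `TRUE_SD**2 + SAMPLING_SE**2`, well above the variance of the true effects, which is why the raw spread of published estimates overstates genuine heterogeneity.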

Bayesian hierarchical models (BHMs) can provide a good estimate of the underlying variation. These models incorporate levels of data into a hierarchical structure which splits out the variation at the cross-study or ‘general level’, and corrects for the sampling variation within each study at the ‘data level’ (Gelman et al. 2004, Rubin 1981, Efron and Morris 1975). Although these models can be estimated in many ways, Bayesian methods often perform better, especially when we have so few studies (Chung et al. 2013, Chung et al. 2015).
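For concreteness, the simplest model of this kind, the normal-normal model associated with Rubin (1981), can be written in two levels. The notation is introduced here for illustration only: $\hat{\tau}_k$ is the estimated effect in study $k$, $\widehat{se}_k$ its standard error, $\tau_k$ the true effect in that setting, $\tau$ the average effect across settings and $\sigma_\tau$ the cross-setting standard deviation. The models actually fitted in Meager (2019) may differ from this sketch.

```latex
\begin{aligned}
\hat{\tau}_k \mid \tau_k &\sim \mathcal{N}\!\left(\tau_k,\ \widehat{se}_k^{\,2}\right),
  \quad k = 1, \dots, K && \text{(data level)} \\
\tau_k \mid \tau, \sigma_\tau &\sim \mathcal{N}\!\left(\tau,\ \sigma_\tau^{2}\right)
  && \text{(general level)}
\end{aligned}
```

In this notation, $\sigma_\tau$ measures the genuine variation in effects across settings, while the $\widehat{se}_k$ capture the sampling variation within each study.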

To estimate how much the effect of microcredit varies across studies, and how uncertain we should be about the effect of expanding access to microloans in new settings, I perform a Bayesian hierarchical analysis of the microcredit literature (Meager 2019). I examine the effect of access to microcredit on household business profit, expenditures, and revenues, to see if microloans allow poor entrepreneurs to grow their businesses and increase profit (Yunus 2006). Yet households may benefit from microcredit in other ways, such as increased total consumption, shifting to spending on consumer durables, or decreasing spending on ‘temptation’ goods such as cigarettes due to greater hope for the future (Banerjee 2013). I examine these variables as well.

Average effects are small and uncertain

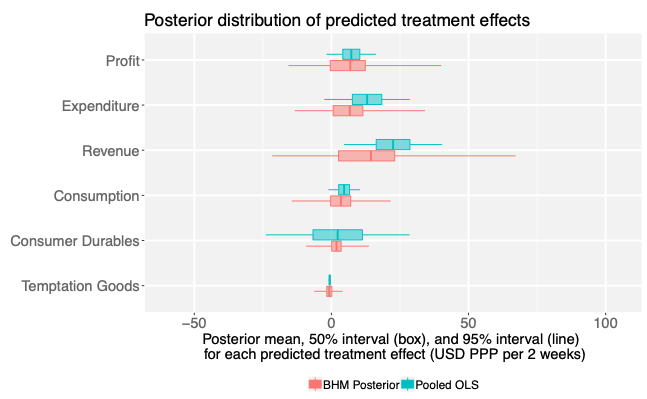

I find that, in general, the effects on these outcomes are likely to be small and uncertain, around 7% of the average control group’s mean outcome. The sign of each effect suggests beneficial effects on all outcomes, which is encouraging. But there is a reasonable probability of no effect, due to uncertainty both within and across studies. Figure 1 shows the predicted effects of microcredit on each outcome, and the uncertainty intervals based on the Bayesian posterior distribution (“posterior” in the sense of arising after taking into account the relevant evidence) for each, as well as the predicted effects using classical meta-analysis techniques (via full pooling) which do not account for cross-study variation when estimating uncertainty. Overall, there is little evidence that microcredit harms borrowers as was feared by some of its critics, but there is also little evidence of the transformative positive effects initially claimed by its advocates.

Figure 1 Predicted effects of microcredit in future settings

Note: BHM estimates incorporate information about uncertainty in effects across settings; Pooled OLS (a full-data implementation of a classical meta-analysis approach) does not.
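The gap between the two kinds of interval in Figure 1 can be illustrated with a minimal sketch. It assumes a simple normal-normal hierarchical model with flat priors, integrates the cross-site standard deviation over a grid, and uses made-up study estimates; it is not the estimation procedure or the data of Meager (2019), and every name and number below is hypothetical.

```python
import math

# Made-up site-level estimates and standard errors, for illustration only.
# They are NOT the estimates from the seven microcredit studies.
estimates = [2.0, 9.0, -3.0, 5.0, 12.0, 1.0, 6.0]
std_errors = [6.0, 5.0, 7.0, 4.0, 8.0, 5.0, 6.0]


def full_pooling(y, s):
    """Precision-weighted mean and its standard error, assuming one common effect."""
    weights = [1.0 / se ** 2 for se in s]
    mean = sum(w * yi for w, yi in zip(weights, y)) / sum(weights)
    return mean, math.sqrt(1.0 / sum(weights))


def hierarchical_prediction(y, s, sd_max=40.0, n_grid=2000):
    """Mean and sd of the effect in a new setting under a normal-normal model.

    Flat priors on the average effect and on the cross-site sd (up to sd_max);
    the cross-site sd is integrated out numerically on a grid.
    """
    grid = []  # (log posterior weight, conditional mean, conditional predictive variance)
    for i in range(n_grid):
        sd = sd_max * (i + 0.5) / n_grid
        total_var = [se ** 2 + sd ** 2 for se in s]
        weights = [1.0 / v for v in total_var]
        var_mu = 1.0 / sum(weights)
        mu_hat = var_mu * sum(w * yi for w, yi in zip(weights, y))
        # Log marginal posterior of the cross-site sd, up to a constant.
        log_post = 0.5 * math.log(var_mu)
        for yi, v in zip(y, total_var):
            log_post -= 0.5 * math.log(v) + (yi - mu_hat) ** 2 / (2.0 * v)
        grid.append((log_post, mu_hat, var_mu + sd ** 2))
    top = max(lp for lp, _, _ in grid)
    raw = [math.exp(lp - top) for lp, _, _ in grid]
    norm = sum(raw)
    probs = [r / norm for r in raw]
    mean = sum(p * m for p, (_, m, _) in zip(probs, grid))
    # Mixture variance: average conditional variance plus variance of the means.
    var = sum(p * (v + (m - mean) ** 2) for p, (_, m, v) in zip(probs, grid))
    return mean, math.sqrt(var)


pooled_mean, pooled_se = full_pooling(estimates, std_errors)
hier_mean, hier_sd = hierarchical_prediction(estimates, std_errors)
print(f"full pooling:       {pooled_mean:.2f} (se {pooled_se:.2f})")
print(f"hierarchical model: {hier_mean:.2f} (predictive sd {hier_sd:.2f})")
```

The hierarchical predictive standard deviation is necessarily at least as large as the full-pooling standard error, because it also carries the uncertainty about how much the effect in a new setting differs from the average.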

Variation in effects across settings is moderate

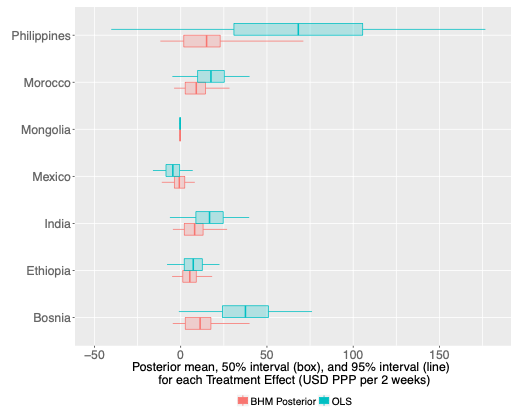

The BHM results show that variation in effects is moderate compared to the average effect size itself, and compared to the sampling variation within each study. On average, across different metrics and outcomes, approximately 60% of the initially observed variation in microcredit effects was actually due to sampling variation. The genuine variation in effects across studies is thus smaller than previously thought (Pritchett and Sandefur 2015, Vivalt 2016). Figure 2 shows how the variation changes from the original study estimates to the BHM posterior estimates.

Figure 2 Original OLS estimates of effects of microcredit on household profit in each setting (listed by country) computed separately compared to BHM posterior estimate computed with all data
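The kind of shrinkage shown in Figure 2 can be sketched with one conventional pooling metric: the share of a study's total variance that comes from sampling error. This is only an illustration under a normal-normal model with the cross-site parameters treated as known; the function names and the numbers in the usage lines are hypothetical, and the figure of roughly 60% quoted above averages over several metrics rather than this one alone.

```python
def pooling_factor(std_error, cross_site_sd):
    """Share of a study's total variance that is sampling variance (between 0 and 1)."""
    return std_error ** 2 / (std_error ** 2 + cross_site_sd ** 2)


def shrunk_estimate(estimate, std_error, average_effect, cross_site_sd):
    """Posterior mean of a site's effect, conditional on the cross-site parameters.

    The noisier the study relative to the genuine cross-site variation,
    the further its estimate is pulled towards the average effect.
    """
    lam = pooling_factor(std_error, cross_site_sd)
    return (1.0 - lam) * estimate + lam * average_effect


# Hypothetical study: estimate 10 with standard error 4, average effect 5,
# cross-site standard deviation 3.
lam = pooling_factor(4.0, 3.0)
shrunk = shrunk_estimate(10.0, 4.0, 5.0, 3.0)
print(lam, shrunk)
```

With these made-up inputs the pooling factor is 16/25, so the study's estimate moves most of the way from 10 towards the average of 5.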

While there is still some moderate variation in effects, these RCTs appear to be reasonably externally valid. That does not mean, however, that we could have ignored the heterogeneity in effects across settings: the uncertainty we should have about the effect in the next setting is indeed larger than it would be if we assumed homogeneous effects, as Figure 1 showed.

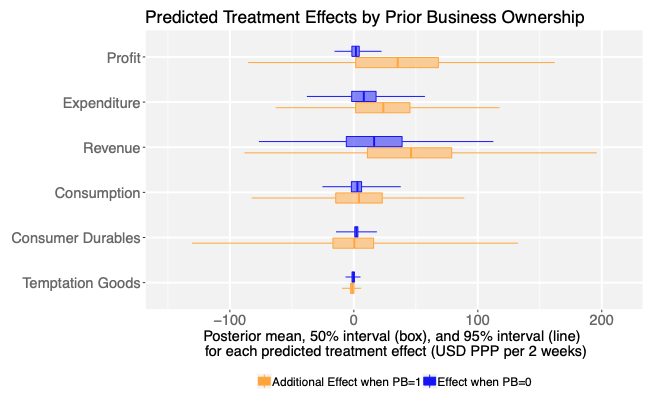

To further understand the possible sources of the variation, I explore the role of covariates at both the household and study level. Some of the authors of the original studies thought, for example, that having previous business experience might signal the capacity to use new lines of credit in a productive way. Because I have all the data, I can extend that analysis to all settings. As shown in Figure 3, I find that microcredit often has no effect for those households with no previous business experience. By contrast, the effect on households with business experience is larger on average, yet more uncertain and with more variation across settings.

Figure 3 Predicted effects of microcredit split by prior business ownership status

Understanding the role of study-level variables, such as the interest rates or the NGO’s borrower targeting strategy, is much harder. The correlation between any given contextual factor and the effect size can’t be interpreted causally due to the high probability of omitted variables affecting both. Moreover, the correlations are computed from seven data points and there are more than seven important variables – a recipe for statistical overfitting. We must be cautious about what we can learn in this situation. The best I can do is analyse the covariates using methods such as ridge regression to prevent overfitting and assess the relative predictive power of these variables. I find that economic variables such as interest rates predict variation in effects better than differences in study protocols such as the randomisation unit. This suggests that the heterogeneity we do observe reflects genuine differences in effects across settings.
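To see why a penalty such as ridge is needed when there are more covariates than studies, consider the following minimal sketch. The data are random numbers standing in for seven studies and eight standardised study-level covariates; they are entirely hypothetical, and the code is a generic ridge estimator rather than the specification used in the underlying paper.

```python
import random

random.seed(1)  # fixed seed so the sketch is reproducible

N_STUDIES, N_COVARIATES = 7, 8  # more covariates than data points

# Hypothetical, already-standardised covariates and centred effect sizes.
X = [[random.gauss(0.0, 1.0) for _ in range(N_COVARIATES)] for _ in range(N_STUDIES)]
y = [random.gauss(0.0, 1.0) for _ in range(N_STUDIES)]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def ridge(rows, targets, penalty):
    """Ridge coefficients: solve (X'X + penalty * I) beta = X'y."""
    p = len(rows[0])
    xtx = [[sum(row[i] * row[j] for row in rows) + (penalty if i == j else 0.0)
            for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * t for row, t in zip(rows, targets)) for i in range(p)]
    return solve(xtx, xty)


def squared_norm(coefficients):
    return sum(c ** 2 for c in coefficients)


weak = ridge(X, y, penalty=0.1)     # light penalty: coefficients chase the noise
strong = ridge(X, y, penalty=10.0)  # heavy penalty: coefficients shrink towards zero
print(squared_norm(weak), squared_norm(strong))
```

Without the penalty the system has no unique solution here, since X'X is singular when covariates outnumber studies. A larger penalty always yields a smaller overall coefficient vector, which is the sense in which ridge guards against overfitting; comparing how much each coefficient survives the shrinkage is one rough way to rank predictive power.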

Conclusion

While the environment in which the evidence will eventually be used for making policy decisions is never exactly the same as the environment studied, in the case of microcredit the difference in effects across studies is moderate. These results point to the importance of aggregating evidence across studies while respecting the possibility that a policy or aid intervention might do something different in different places. There is still some uncertainty about the effect of microcredit in future settings, but the worst fears about external validity of RCTs seem unfounded in this case.

References

Allcott, H (2015), "Site selection bias in programme evaluation", The Quarterly Journal of Economics, 130(3), 1117-1165.

Angelucci, M, D Karlan and J Zinman (2015), "Microcredit Impacts: Evidence from a randomised microcredit programme placement experiment by Compartamos Banco", American Economic Journal: Applied Economics, 7(1): 151-82.

Attanasio, O, B Augsburg, R De Haas, E Fitzsimons and H Harmgart (2015), "The impacts of microfinance: Evidence from joint-liability lending in Mongolia", American Economic Journal: Applied Economics, 7(1): 90-122.

Augsburg, B, R De Haas, H Harmgart and C Meghir (2015), "The impacts of microcredit: Evidence from Bosnia and Herzegovina", American Economic Journal: Applied Economics, 7(1): 183-203.

Banerjee, A (2013), "Microcredit under the microscope: What have we learned in the past two decades, and what do we need to know?", Annual Review of Economics, 5(1), 487-519.

Banerjee, A, E Duflo, R Glennerster and C Kinnan (2015a), "The miracle of microfinance? Evidence from a randomised evaluation", American Economic Journal: Applied Economics, 7(1): 22-53.

Chung, Y, A Gelman, S Rabe-Hesketh, J Liu and V Dorie (2015), “Weakly informative prior for point estimation of covariance matrices in hierarchical models”, Journal of Educational and Behavioral Statistics, 40(2), 136-157.

Chung, Y, S Rabe-Hesketh, V Dorie, A Gelman and J Liu (2013), “A nondegenerate penalised likelihood estimator for variance parameters in multilevel models”, Psychometrika, 78(4), 685-709.

Crepon, B, F Devoto, E Duflo and W Pariente (2015), "Estimating the impact of microcredit on those who take it up: Evidence from a randomised experiment in Morocco", American Economic Journal: Applied Economics, 7(1): 123-50.

Efron, B and C Morris (1975), “Data analysis using Stein's estimator and its generalisations”, Journal of the American Statistical Association, 70(350), 311-319.

Gebelhoff, R (2016), “Microcredit isn’t dead”, Washington Post, December 12, 2016.

Gelman, A, J Carlin, H Stern and D Rubin (2004), Bayesian data analysis, Second Edition, Taylor & Francis.

Karlan, D and J Zinman (2011), "Microcredit in theory and practice: Using randomised credit scoring for impact evaluation", Science, 10 June 2011: 1278-1284.

Meager, R (2019), "Understanding the average impact of microcredit expansions: A Bayesian hierarchical analysis of seven randomised experiments", forthcoming in the American Economic Journal: Applied Economics in January 2019.

Pritchett, L and J Sandefur (2015), "Learning from experiments when context matters", AEA 2015 Preview Papers, accessed online February 2015.

Rubin, D (1981), "Estimation in parallel randomised experiments", Journal of Educational and Behavioral Statistics, 6(4), 377-401.

Tarozzi, A, J Desai and K Johnson (2015), "The impacts of microcredit: Evidence from Ethiopia", American Economic Journal: Applied Economics, 7(1): 54-89.

Vivalt, E (2016), "How much can we generalise from impact evaluations?", Working Paper, NYU.

Yunus, M (2006), "Nobel Lecture", Oslo, 10 December.