A novel survey method that gives workers plausible deniability when reporting harassment increased reported levels of threatening behaviour, physical harassment, and sexual harassment at a large Bangladeshi apparel producer

The #MeToo movement underscored that decision-makers in organisations, and other interested parties, often lack an understanding of the scale and nature of harassment, which makes it difficult to formulate effective policy responses.

Reporting harassment is a difficult step for individuals who have been victimised and for witnesses concerned with possible retaliation and reputational costs. Recent research documents that individuals who come forward often incur severe adverse economic consequences; for example, Adams-Prassl et al. (2023) find that individuals who report co-worker violence experience large employment and earnings declines that persist for at least five years.

In this context, economic theory suggests that providing plausible deniability – making it harder to infer an individual’s intended report – as well as taking steps that increase trust that reports will not be leaked, can increase individuals’ willingness to come forward by reducing the risks of retaliation and reputational damage (Chassang and Padró i Miquel 2018, Chassang and Zehnder 2019).

This motivated us to test these possibilities in a phone-based survey experiment on reporting harassment, conducted in partnership with a large Bangladeshi apparel producer (Boudreau, Chassang, González-Torres, and Heath 2023). The firm’s senior management wanted to improve its HR policies for workers and to understand the extent of any harassment problems in the firm. We surveyed 2,245 of its workers about their experience of threatening behaviour, physical harassment, and sexual harassment by their direct supervisor.

In the survey experiment, we randomly assigned respondents to one of two survey methods: directly asking about their experience of harassment, or providing plausible deniability through what we call “hard garbling” (HG), which we explain below. We also tested two approaches aimed at further reducing respondents’ perceived likelihood that their reports could be leaked. First, we tested “rapport building” (RB): taking time, beyond what is typical in a social science survey, to build trust with respondents in a natural but pre-specified manner. Second, we removed the survey module that collected personally identifying information about respondents’ production teams, in particular the name of their direct supervisor. We refer to this condition as the “Low PII” condition. We assured all respondents of a strong commitment to confidentiality, so the actual risks of leakage and retaliation were extremely low.

We were also interested in understanding how improved reporting data could be used to answer policy-relevant questions about the nature of harassment, such as:

- How prevalent is harassment?

- What share of employees are responsible for the damage?

- How isolated are victims within teams?

What is “hard garbling” (HG), and how does it provide plausible deniability?

HG automatically records a random subset of reports as complaints. Under HG, reports of harassment are always recorded as such, but reports of no harassment are sometimes switched to reports of harassment. We can then apply statistical formulas to estimate the prevalence of harassment from the garbled reports. HG provides plausible deniability because a worker who fears retaliation can always claim that they did not report harassment. This claim is credible because the system could have randomly recorded their answer as a report of harassment.
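A minimal sketch of the mechanism described above, assuming each respondent is independently selected for garbling with a known probability `q`. The names `hard_garble`, `estimate_prevalence` and `q` are illustrative only, not the survey system used in the study. Because an intended "yes" is never switched, the expected share of recorded "yes" reports is p + (1 - p)q, which can be inverted to estimate the prevalence p.

```python
import random


def hard_garble(intended, q, rng):
    """Record intended reports under hard garbling (HG).

    intended: list of bools, True if the respondent intends to report harassment.
    q: hypothetical probability that the system selects a respondent for garbling.
    Returns the recorded reports and the number of respondents selected.
    """
    recorded = []
    n_selected = 0
    for wants_to_report in intended:
        selected = rng.random() < q
        n_selected += selected
        # A "yes" is always recorded as "yes"; a "no" becomes "yes" if selected.
        recorded.append(wants_to_report or selected)
    return recorded, n_selected


def estimate_prevalence(recorded, q):
    """Invert P(recorded yes) = p + (1 - p) * q to estimate the prevalence p."""
    share_yes = sum(recorded) / len(recorded)
    return (share_yes - q) / (1 - q)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical inputs: 5% true prevalence, q = 0.3, and 2,245 respondents.
    intended = [rng.random() < 0.05 for _ in range(2245)]
    recorded, _ = hard_garble(intended, q=0.3, rng=rng)
    print(f"share recorded yes: {sum(recorded) / len(recorded):.3f}")
    print(f"estimated prevalence: {estimate_prevalence(recorded, q=0.3):.3f}")
```

An individual recorded "yes" reveals little about that respondent's intended answer, while the aggregate estimate remains informative about prevalence.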

HG builds on decades of research on eliciting truthful responses to sensitive survey questions, and on overcoming collusion (e.g. working together to punish whistle-blowers), corruption, and other forms of employee misbehaviour that can prevent the collection of accurate data on harassment and other sensitive or controversial issues.

Dating back to work by Warner (1965), social scientists have shown that the prevalence of sensitive behaviour can be accurately estimated from garbled data. However, prior methods have relied on survey respondents voluntarily randomising their own answers, an approach we call “soft garbling.” Although soft garbling can be helpful, recent evidence shows that respondents often decline to garble their own reports, and that the effectiveness of soft garbling in liberating voice can be short-lived in organisational settings. The HG methodology developed in our paper addresses this issue by automating the addition of noise. It also lets us track the number of reports that are garbled, which greatly improves the accuracy of the estimates, especially for relatively rare reports such as harassment.
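To illustrate why tracking the number of garbled reports helps with rare outcomes, the Monte Carlo sketch below compares two estimators. It assumes, hypothetically, that respondents are selected for garbling independently with probability `q` and that the system logs how many respondents were selected (but not which ones). The parameters and the function name `simulate_estimates` are illustrative, and soft garbling is not simulated.

```python
import random
import statistics


def simulate_estimates(p, q, n, reps, seed=0):
    """Compare two HG prevalence estimators by Monte Carlo simulation.

    Assumptions (hypothetical): each of n respondents intends to report with
    probability p, is independently selected for garbling with probability q,
    selected respondents are recorded as "yes", and the system logs only how
    many respondents were selected.
    """
    rng = random.Random(seed)
    rate_based, count_based = [], []
    for _ in range(reps):
        n_yes = 0
        n_selected = 0
        for _ in range(n):
            intended = rng.random() < p
            selected = rng.random() < q
            n_selected += selected
            n_yes += intended or selected
        # Uses only the known garbling probability q.
        rate_based.append((n_yes / n - q) / (1 - q))
        # Uses the logged count: unselected reports are undistorted, and each
        # selected respondent contributes exactly one recorded "yes".
        count_based.append((n_yes - n_selected) / (n - n_selected))
    return rate_based, count_based


if __name__ == "__main__":
    rate_based, count_based = simulate_estimates(p=0.02, q=0.3, n=2245, reps=300)
    for name, est in [("rate-based", rate_based), ("count-based", count_based)]:
        print(f"{name}: mean {statistics.mean(est):.4f}, sd {statistics.stdev(est):.4f}")
```

Both estimators are unbiased, but when harassment is rare most recorded "yes" reports are garbled ones. The rate-based estimator therefore inherits the sampling noise of the garbling itself. Conditioning on the logged count removes that noise, leaving a variance of roughly p(1 - p)/(n(1 - q)).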

Trade-offs of secure survey design features

As explained above, in addition to HG, we tested the importance of rapport building (RB) and reducing the amount of personally identifying information (Low PII) collected by not asking the name of the worker’s direct supervisor. All these survey design features entail trade-offs.

For HG, a decision-maker could in principle obtain the garbled survey data, in which some reports are coded “yes” because respondents reported being harassed and others because the survey system randomly assigned them a “yes”. The decision-maker could use these data to target an intervention, such as sensitivity training or a more thorough yearly review, in a “noisy” way: the employees linked to these reports include the perpetrators, but also some innocent individuals. This limits how severe an intervention the organisation can justify on the basis of the data. It is important that everyone in the organisation know that the governance system is set up this way.
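A back-of-the-envelope Bayes calculation shows how noisy such targeting is. Assuming a prevalence `p` of intended reports and a garbling probability `q` (both hypothetical inputs, not figures from the study), a recorded "yes" is genuine with probability p / (p + (1 - p)q).

```python
def share_genuine(p, q):
    """Share of recorded "yes" reports that reflect an intended report.

    p: hypothetical prevalence of intended reports.
    q: hypothetical probability that an intended "no" is switched to "yes".
    Genuine "yes" reports occur with probability p; garbled ones with (1 - p) * q.
    """
    return p / (p + (1 - p) * q)


if __name__ == "__main__":
    for p in (0.01, 0.05, 0.10):
        print(f"p = {p:.2f}: share genuine = {share_genuine(p, q=0.3):.2f}")
```

With p = 0.05 and q = 0.3, only about 15% of flagged reports would be genuine. This is why interventions based on garbled data should be mild enough to be acceptable when applied to innocent individuals.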

RB requires extra time to develop the script, train enumerators on it, and conduct the survey. Removing identifying information gives the organisation less data about the nature of its harassment problem; in our case, it no longer learns the name of the manager responsible for the harassment.

Effects of secure survey design on reporting

We randomly assigned participants to different combinations of HG, RB, and Low PII. In the control group, we directly asked survey participants about harassment, did not build rapport, and included the module asking their supervisor’s name.

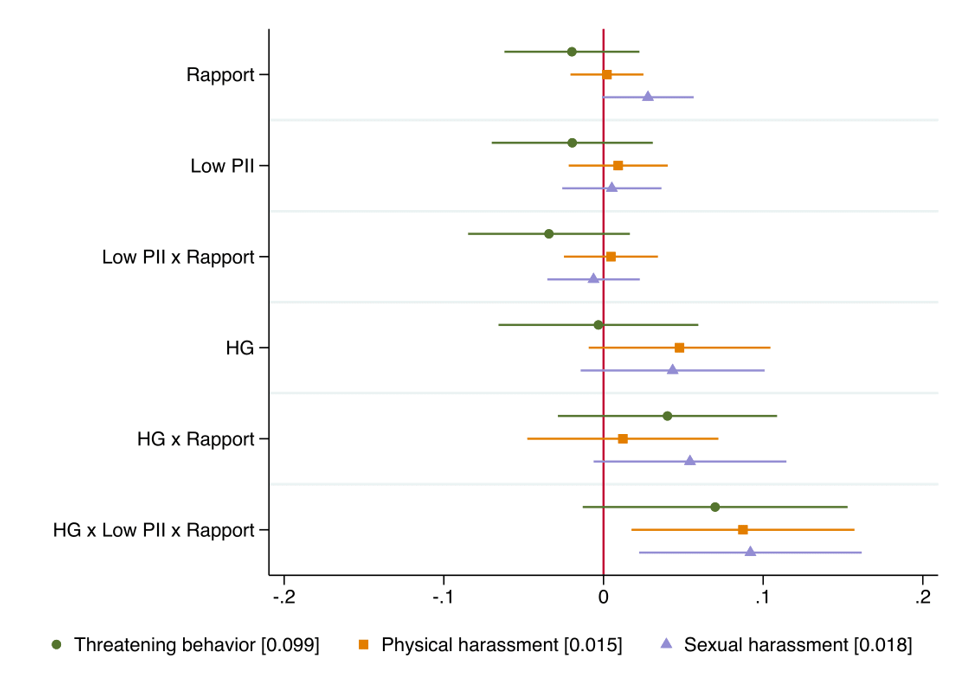

In the control group, 9.9% of workers reported experiencing threatening behaviour, 1.5% reported being physically harassed, and 1.8% reported being sexually harassed by their supervisor. Figure 1 displays the main results; there are three main findings:

- Providing plausible deniability through hard garbling substantially increases the share of workers reporting harassment: under HG, 13.6% reported threatening behaviour, 5.7% reported physical harassment, and 7.7% reported sexual harassment.

- Rapport building had a positive but not statistically significant effect on the reporting of sexual harassment, and no detectable effect on the reporting of other types of harassment.

- Removing questions about respondents’ supervisors had a marginally statistically significant positive effect on the reporting of physical harassment, but no detectable effect on the reporting of threatening behaviour or sexual harassment.
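The reporting rates quoted above can be compared directly. The sketch below computes the percentage-point gap and the ratio between the HG and control rates. These are simple descriptive comparisons of the quoted shares, not the regression estimates plotted in Figure 1, and `compare_rates` is an illustrative helper.

```python
def compare_rates(control, treated):
    """Percentage-point gap and ratio of treated vs control reporting rates (%)."""
    return {
        kind: (round(treated[kind] - control[kind], 1),
               round(treated[kind] / control[kind], 1))
        for kind in control
    }


# Reporting rates (%) quoted in the text for the control group and under HG.
control = {"threatening": 9.9, "physical": 1.5, "sexual": 1.8}
hard_garbling = {"threatening": 13.6, "physical": 5.7, "sexual": 7.7}

for kind, (gap_pp, ratio) in compare_rates(control, hard_garbling).items():
    print(f"{kind}: +{gap_pp} pp, {ratio}x")
```

Under HG, reported rates are roughly 1.4, 3.8, and 4.3 times the control rates for threatening behaviour, physical harassment, and sexual harassment, respectively. The relative increase is largest for the rarest and most sensitive categories.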

Figure 1: Effects of secure survey design features on reporting

Notes: This figure plots the coefficients from separate regressions of the normalised reporting outcome on the treatment indicators and strata fixed effects. Before running the regressions, reporting outcomes in the HG arms are normalised to recover respondents’ intended responses: normalised responses equal the intended response plus a mean-zero heteroskedastic error term. The whiskers are 95% confidence intervals based on robust standard errors. The omitted category is the control condition. The number in square brackets is the control-group reporting rate for the given type of harassment.
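One normalisation consistent with this note, assuming a single known garbling probability `q` (the paper's exact procedure may differ), maps a recorded report y in {0, 1} to (y - q)/(1 - q). The sketch checks that the resulting error is mean zero, and that its variance is zero for intended "yes" responses and q/(1 - q) for intended "no" responses. This difference in variance is what makes the error heteroskedastic. The function names are illustrative, and the regression itself is not reproduced.

```python
def normalise(recorded_yes, q):
    """Normalised HG outcome: (y - q) / (1 - q) for a recorded report y in {0, 1}.

    q is a hypothetical, known garbling probability. In expectation this equals
    the intended response (1 = intended to report harassment, 0 = did not).
    """
    return (float(recorded_yes) - q) / (1 - q)


def error_moments(intended_yes, q):
    """Mean and variance of (normalised outcome - intended response)."""
    intended = 1.0 if intended_yes else 0.0
    if intended_yes:
        # An intended "yes" is always recorded as "yes", so there is no error.
        outcomes = [(1.0, normalise(True, q))]
    else:
        # An intended "no" is recorded as "yes" with probability q.
        outcomes = [(q, normalise(True, q)), (1 - q, normalise(False, q))]
    mean = sum(prob * (value - intended) for prob, value in outcomes)
    var = sum(prob * (value - intended - mean) ** 2 for prob, value in outcomes)
    return mean, var


if __name__ == "__main__":
    for intended_yes in (True, False):
        print(intended_yes, error_moments(intended_yes, q=0.3))
```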

As Figure 1 shows, while we do not find strong evidence that RB or Low PII alone affects reporting, we find suggestive evidence that they contribute to increased reporting when combined with HG.

Finally, we were interested in how reporting rates vary between men and women, and whether the survey design features affect reporting differently by gender. In the control group, women were less than half as likely as men to report threatening behaviour or physical harassment, but slightly more likely to report sexual harassment. HG increases reporting for both men and women, and the effects are at least as large for men as for women. We cannot disentangle whether these differences reflect differences in the incidence of harassment or in reporting. RB may have increased the reporting of sexual harassment among women but backfired for men, possibly because the enumerators conducting the surveys were women, which may have made men less comfortable reporting.

How can organisations use this survey information?

The anonymous apparel producer provides a case study of how organisations can use more informative survey data to answer policy-relevant questions.

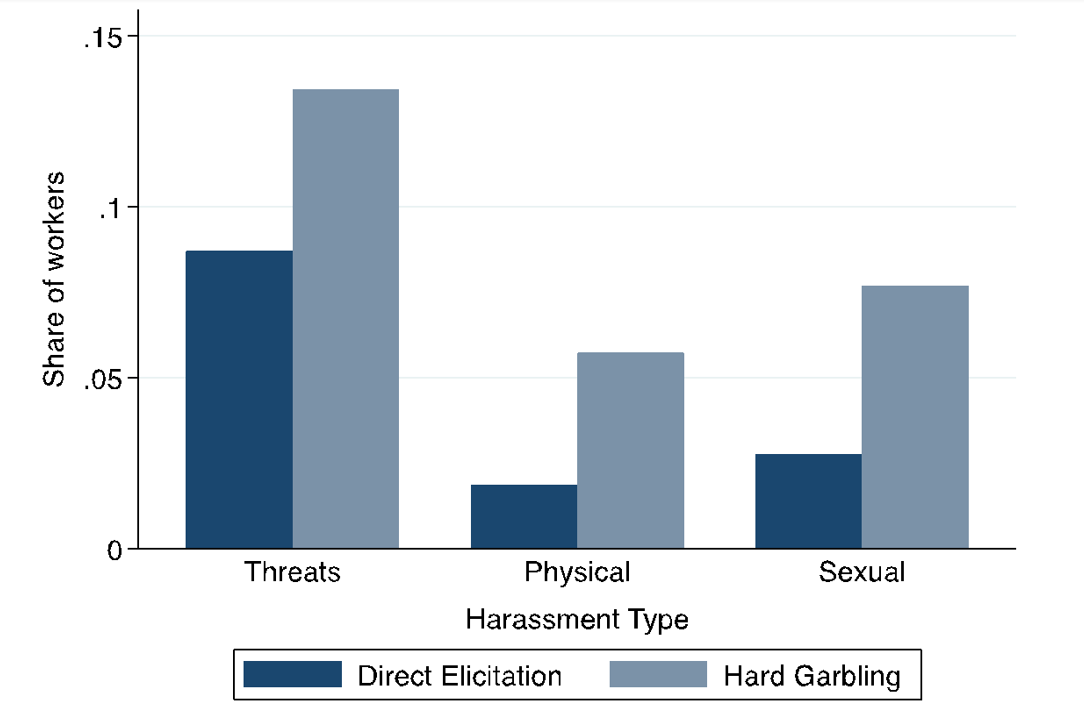

Figure 2 illustrates that harassment is meaningfully more widespread than standard surveys would suggest. This means that addressing harassment may have a much more positive impact on employee wellbeing than previously available data would indicate. Since both men and women report significant levels of harassment under HG, addressing harassment would likely benefit both sexes.

Figure 2: Share of workers who have been victimised, by survey method

Notes: This figure reports harassment rates estimated using direct elicitation (the control condition for the harassment questions) and hard garbling, respectively. We pool across all treatment arms, including the rapport-building arms and the arms in which we do not collect team-level identifying information.

Turning to harassment at the team level, we find that harassment is widespread across production teams: we estimate that 72% of production teams have at least one worker who reports threatening behaviour, 25% have at least one who reports physical harassment, and 40% have at least one who reports sexual harassment.

Among teams with at least one worker who reports, 17%, 14%, and 8% have a second worker come forward for threatening behaviour, physical harassment, and sexual harassment, respectively. No team has three or more workers come forward for threatening behaviour or physical harassment, and very few do for sexual harassment. This indicates that the bulk of the challenge is dealing with widespread, medium-intensity harassment, rather than punishing a small group of severe perpetrators. It also indicates that victims are relatively isolated within teams, so policies that require multiple victims to provide corroborating evidence may miss most cases. One caveat when interpreting these statistics is that our estimates are likely still lower bounds on the true extent of harassment.
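The team-level statistics above are the share of teams with at least one report and, among those teams, the share with a second report. The sketch below shows that bookkeeping on hypothetical, undistorted worker-level reports. The estimates in the text are derived from garbled data, which requires a correction this sketch does not attempt. The `team_report_stats` function and the example data are illustrative only.

```python
from collections import Counter


def team_report_stats(reports):
    """reports: list of (team_id, reported) pairs, one per worker.

    Returns (share of teams with at least one report,
             share of those teams with at least two reports).
    """
    counts = Counter()
    teams = set()
    for team_id, reported in reports:
        teams.add(team_id)
        counts[team_id] += reported  # True counts as 1, False as 0
    with_one = [t for t in teams if counts[t] >= 1]
    with_two = [t for t in with_one if counts[t] >= 2]
    share_one = len(with_one) / len(teams)
    share_two = len(with_two) / len(with_one) if with_one else 0.0
    return share_one, share_two


if __name__ == "__main__":
    # Hypothetical data: four teams, two with at least one report.
    example = [("A", True), ("A", False), ("B", True), ("B", True),
               ("C", False), ("D", False)]
    print(team_report_stats(example))
```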

Final thoughts

A lack of plausible deniability causes severe underreporting of harassment in the organisational setting we study. We think that this finding is broadly relevant whenever members of organisations face risks of retaliation or reputational costs if they report sensitive information. Lack of trust in the integrity of the reporting system may also contribute, though our results suggest that trust-building is highly context-dependent and may backfire when not well targeted. Measures to increase trust may matter more in settings where respondents receive weaker security assurances than ours did.

We think that how to scale up enforcement actions based on reports collected using HG is an important direction for future research. Given the “noise” built into the HG method, any action needs to be proportionate to this type of information. Sending a manager to a training seminar, initiating a more thorough yearly review, or moving the worker associated with the report to a new team, for example, may be appropriate responses, whereas firing a manager would require additional investigation.

References

Adams-Prassl, A, K Huttunen, E Nix, and N Zhang (2023), “Violence Against Women at Work,” Tech. rep., mimeo.

Boudreau, L, S Chassang, A González-Torres, and R Heath (2023), “Monitoring Harassment in Organizations,” Working Paper.

Chassang, S and G Padró i Miquel (2018), “Crime, Intimidation, and Whistleblowing: A Theory of Inference from Unverifiable Reports,” Review of Economic Studies, 86: 2530–2553.

Chassang, S and C Zehnder (2019), “Secure Survey Design in Organizations: Theory and Experiments,” Tech. rep., mimeo.

Warner, S L (1965), “Randomized response: A survey technique for eliminating evasive answer bias,” Journal of the American Statistical Association, 60: 63–69.