Data from developing country settings highlight the risk of misinterpreting non-cognitive skills and personality using existing measures

Increasing human capital is often seen as key for reducing poverty. Large investments are made in training programmes aimed at improving skills ($1 billion a year by the World Bank alone). Skills are among the main drivers of productivity differences between sectors in the economy (Lagakos and Waugh 2013) and cognitive and non-cognitive skills can be linked to individuals’ success in life (Heckman et al. 2006). Cognitive skills in this literature refer to ‘hard’ skills such as abstract reasoning power, language and maths skills. By contrast, non-cognitive skills are more ‘soft’ or socio-emotional skills such as motivation and interpersonal interaction.

A rapidly growing literature in development economics also uses measures of skills and personality to explain economic performance, for example for screening purposes in the job market, assessing credit eligibility, analysing treatment heterogeneity of interventions, and predicting selection or performance in public sector jobs. Development economists and policymakers are therefore increasingly interested in measuring skills and personality, and in using those measures for policy design and evaluations.

However, skills and personality are complex and multidimensional, and therefore hard to measure. Typically, economic studies rely on self-reported measures through questionnaires, with a set of questions aimed at measuring a specific skill or personality trait. These can be tests where the respondent tries to find the correct answer, or questions asking the respondent to characterise herself, her views, or her preferences. Psychometricians have developed scales to measure the different concepts, but most scales have been tested and validated only in developed country settings. For instance, the large literature on the universality of the Five Factor Model (FFM) for personality traits relies on data from college students in high- and middle-income settings (Schmitt et al. 2007).

Many questions can be raised about the applicability of some of the existing scales for poorer populations given the:

- high level of abstraction of many questions,

- low levels of education in many respondent populations,

- difficulties of standardisation for enumerator-administered tests conducted in the field, and

- translation challenges.

And yet, the standard practice has been to apply the common scales without prior validation in developing country contexts.

Testing the reliability and validity of skills measures in Kenya and Colombia

To address this lack of validation, we conducted survey experiments to test a wide range of commonly used measures of cognitive, non-cognitive, and technical skills on rural populations in Kenya and Colombia (Laajaj and Macours 2020). The tests were applied twice within less than a month to test reliability (through comparison of answers to the same questions). To test validity, we analysed the degree to which the tools measure what they claim to measure. For instance, we checked the correlation between different measures of the same skill, and tested whether the tools predict outcomes that are likely affected by the skill. Finally, we tested whether items that aim to measure a given skill or personality trait are more correlated with each other than with questions aimed at measuring a different skill.
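As a rough illustration of the reliability check described above, the sketch below correlates scores from two rounds of the same test. The scores are invented for illustration, and the Pearson correlation is only one common way to summarise test-retest reliability; it is not necessarily the exact statistic used in the study.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Hypothetical scores for the same six respondents in two survey rounds
# (illustrative numbers, not data from the study).
round_1 = [12, 15, 9, 20, 14, 11]
round_2 = [13, 14, 10, 19, 15, 10]

# A value close to 1 means respondents are ranked similarly in both rounds.
test_retest = pearson(round_1, round_2)
print(f"test-retest correlation: {test_retest:.2f}")
```

A low correlation between rounds held this close together would point to measurement error rather than genuine change in the underlying skill.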

Cognitive skills versus non-cognitive skills

Our results show that cognitive skills, using both standardised scales and tests developed for the specific context, can be measured with high levels of reliability and validity. Indeed, this is similar to findings in developed country settings. Cognitive skills also show good predictive validity, even after controlling for educational levels. This is good news for development economists collecting survey data on cognitive skills.

On the other hand, we find that standard application of commonly used scales of non-cognitive and technical skills suffers from large measurement error, resulting in low reliability and validity. For technical skills (agricultural knowledge in our context), using more structural methods to reduce measurement error results in a construct with higher predictive validity, even if large measurement error remains.

The measures of non-cognitive skills are perhaps the most worrying, as answers appear to be driven by a mix of the true underlying skill and answering patterns. For example, respondents with lower cognitive skills are more likely to agree, even with contradictory statements. Moreover, enumerator interactions can explain some of the identified measurement problems. The use of such measures may then lead to erroneous conclusions.
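One simple way to make the tendency to agree with contradictory statements visible is to pair each statement with its opposite and average the centred answers. The sketch below assumes a 1-5 agreement scale and hypothetical item pairs; the function name and data are illustrative and not taken from the study.

```python
# Hypothetical 1-5 Likert answers (1 = strongly disagree, 5 = strongly agree).
# Each pair holds the answers to one statement and to its opposite,
# for example "I am talkative" and "I tend to be quiet".
MIDPOINT = 3

def acquiescence_score(pairs):
    """Average tendency to agree across contradictory item pairs.

    For a consistent respondent, the two answers in a pair sit on opposite
    sides of the midpoint and cancel out after centring. A positive score
    means the respondent tends to agree with both statements.
    """
    centred = [((a - MIDPOINT) + (b - MIDPOINT)) / 2 for a, b in pairs]
    return sum(centred) / len(centred)

consistent = [(5, 1), (4, 2), (2, 4)]  # agrees with one side, rejects the other
yea_sayer = [(5, 4), (4, 4), (5, 5)]   # agrees with both sides of each pair

print(acquiescence_score(consistent))
print(acquiescence_score(yea_sayer))
```

Comparing such a score across respondents with different cognitive test results, or across enumerators, is one way to check whether answering patterns rather than the underlying trait are driving the responses.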

Broadening the evidence base

While these findings were highly consistent between Kenya and Colombia, one may worry about their wider external validity. We hence analysed 29 face-to-face surveys from 94,715 interviewees in 23 low- and middle-income countries across the globe in Laajaj et al. (2019), focusing on personality measures. These include 14 datasets from the World Bank's Skills Measurement Programme (STEP) surveys and 15 datasets collected for various purposes and used in top publications. All instruments measure personality through items of the Big Five Inventory (BFI).

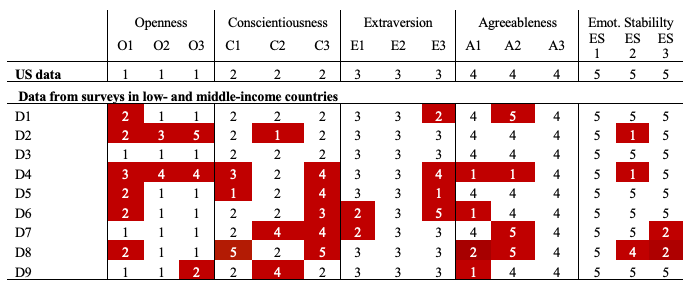

We show that these widely used questions to measure personality (Openness, Conscientiousness, Extraversion, Agreeableness, and Neuroticism) generally do not accurately measure the intended personality traits when collected through face-to-face surveys. In 21 of the 23 datasets, at least one item correlates more with items intended to measure a different personality trait than with items intended to measure the same personality trait (see Figure 1 for examples).[1]

Figure 1 Factor structures of personality questions and comparison with theoretical scale

Note: Each column represents one question of the Big Five Inventory (the same across datasets), sorted by personality trait. Each row represents a different survey. Cells are filled in red if the survey question maps into a different personality trait than the one it was intended to measure. Any row with at least one red cell did not sort the questions into the traits they are expected to measure. See Laajaj et al. (2019) for further explanation.
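The item-level check behind this comparison can be sketched in a few lines: for each item, compare its average correlation with items of its own trait against its average correlation with items of every other trait. The example below uses invented answers for two traits and takes the mean absolute correlation, which is an assumption made here so that reverse-worded items are handled; the published analysis relies on factor structures and may differ in detail.

```python
from math import sqrt

def pearson(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

def misassigned_items(responses, trait_of):
    """Items whose mean absolute correlation is higher with another trait's
    items than with the other items of their own intended trait."""
    flagged = []
    for item, answers in responses.items():
        by_trait = {}
        for other, other_answers in responses.items():
            if other != item:
                r = abs(pearson(answers, other_answers))
                by_trait.setdefault(trait_of[other], []).append(r)
        means = {t: sum(v) / len(v) for t, v in by_trait.items()}
        own = means.get(trait_of[item], 0.0)
        if any(m > own for t, m in means.items() if t != trait_of[item]):
            flagged.append(item)
    return flagged

# Hypothetical answers from six respondents (illustrative, not study data).
# C3 is intended to measure conscientiousness but tracks the extraversion items.
responses = {
    "E1": [1, 2, 3, 4, 5, 3],
    "E2": [2, 2, 3, 5, 5, 3],
    "C1": [5, 1, 4, 2, 3, 3],
    "C2": [4, 1, 5, 2, 3, 2],
    "C3": [1, 3, 3, 4, 5, 2],
}
trait_of = {"E1": "extraversion", "E2": "extraversion",
            "C1": "conscientiousness", "C2": "conscientiousness",
            "C3": "conscientiousness"}

print(misassigned_items(responses, trait_of))
```

In this toy dataset only the item that was deliberately built to follow the wrong trait is flagged, which corresponds to a single red cell in one row of the figure.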

Applying the same criteria that psychometricians used to validate the Big Five in developed countries, we see that the vast majority of survey data from developing countries does not pass the test. A set of items meant as a proxy for a specific personality trait can capture other factors and lead to incorrect inferences. The lack of support for the Big Five factor model across a large set of surveys in diverse contexts indicates that the issues identified are not unique to a specific data collection exercise. Instead, it points to a general problem in the measurement of personality traits through survey data in developing countries.

Policy implications: The risk of misinterpreting non-cognitive skills

These findings entail key lessons for researchers aiming to understand the role of skills in low- and middle-income countries. Our work highlights the risk of misinterpreting non-cognitive skills and personality survey data and provides a warning against naïve interpretations without evidence of validity. Data validation in each context is therefore a must. Inference is only credible if the data has a clear structure and good reliability and validity.

Some precautions that can help reduce enumerator and response biases are to:

- improve the quality of translations and comprehensibility for respondents with lower cognitive ability,

- apply structural methods on cognitive and knowledge tests to improve the reliability of the measures, and

- randomise the assignment of enumerators in field experiments.
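The last precaution in the list above can be illustrated with a short sketch that shuffles respondents and assigns them to enumerators in turn, so that enumerator effects are not systematically tied to respondent characteristics. The identifiers, the fixed seed, and the balanced round-robin rule are all assumptions made for illustration; real fieldwork would usually also stratify by location or language.

```python
import random

def assign_enumerators(respondent_ids, enumerators, seed=0):
    """Randomly assign respondents to enumerators in roughly equal numbers.

    The seed is fixed so that the assignment can be reproduced and audited.
    """
    rng = random.Random(seed)
    shuffled = list(respondent_ids)
    rng.shuffle(shuffled)
    # After shuffling, dealing respondents out in turn keeps workloads balanced.
    return {rid: enumerators[i % len(enumerators)]
            for i, rid in enumerate(shuffled)}

# Hypothetical respondent and enumerator identifiers.
respondents = [f"R{i:03d}" for i in range(1, 13)]
enumerators = ["enum_A", "enum_B", "enum_C"]

assignment = assign_enumerators(respondents, enumerators)
for rid in respondents:
    print(rid, assignment[rid])
```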

More broadly, the findings call for the development of innovative methods that more accurately capture specific skills and personality traits in developing country surveys. Such methods would need to reduce the impact of response patterns and enumerator interactions, and be better adapted to the context and mode of administration.

References

Heckman, J, J Stixrud and S Urzua (2006), “The effects of cognitive and non-cognitive abilities on labour market outcomes and social behaviour”, Journal of Labor Economics 24(3): 411-482.

Laajaj, R and K Macours (2020), “Measuring skills in developing countries”, Journal of Human Resources, forthcoming.

Laajaj, R, K Macours, O Arias, S Gosling, D Pinzón Hernández, J Potter, M Rubio-Codina and R Vakis (2019), “Challenges to capture the big five personality traits in non-WEIRD populations”, Science Advances 5: eaaw5226.

Lagakos, D and M Waugh (2013), “Selection, agriculture, and cross-country productivity differences”, American Economic Review 103(2): 948-980.

Schmitt, D, J Allik, R McCrae and V Benet-Martínez (2007), “The geographic distribution of Big Five personality traits: Patterns and profiles of human self-description across 56 nations”, Journal of Cross-Cultural Psychology 38(2): 173-212.

Endnotes

[1] We further compare with data from 198,365 self-selected respondents of internet surveys from the same countries. These data do reflect the factor structure commonly found in the US and other Western, Educated, Industrialized, Rich, and Democratic (WEIRD) populations. This suggests that cultural and language differences are not the main driver of low validity in face-to-face surveys in low- and middle-income countries.