Stark gender disparities are evident on a Chinese online healthcare platform, with algorithms disproportionately favouring male physicians.

Gender pay gaps are widespread across countries, with women earning 20% less than men on average (ILO 2018). The rapidly expanding gig economy, which connects workers with employers via online platforms, was expected to narrow these gaps because of its flexibility, a particularly important factor for women’s labour force participation (Heath et al. 2024). However, recent research shows that gender gaps persist on platforms such as Upwork (Foong et al. 2018), Amazon Mechanical Turk (Litman et al. 2020), and Uber (Cook et al. 2021). My research (Chen 2024) examines a group of high-skill workers (physicians) on an online healthcare platform and shows how the platform’s algorithm exacerbates, rather than alleviates, gender disparities in the presence of patient discrimination.

Context and data

In China, as in many other low- and middle-income countries, the healthcare system grapples with challenges such as severe overcrowding in urban hospitals and a shortage of high-quality medical resources in rural areas. Online healthcare platforms offer a practical solution by enabling patients, especially those with mild symptoms, to access medical consultations remotely. On these platforms, patients can consult with any available physician via text or telephone, and physicians are required to respond within a specified timeframe. This shift helps to reduce the strain on physical facilities while extending essential services to underserved areas, leading to substantial growth in the e-consultation healthcare market. By 2020, platforms like WeDoctor and Spring Rain Doctor (SRD) had approximately 55 million monthly active users. However, gender discrimination may be more pronounced on these platforms, as they allow patients to choose specific doctors, unlike general outpatient visits at hospitals where patients typically cannot select their physician.

To study differences in prices and patient demand between male and female physicians, I use novel data collected from SRD, whose design is representative of a broad class of e-consultation healthcare services. I conducted two rounds of data collection, one in March-June 2020 and the other in February-April 2023. I then constructed a cross-sectional dataset by merging the 2020 data with supplementary data from 2023. My sample consists of 13,472 unique physicians who were available to provide services at least once on the platform between March 26 and June 30, 2020. In addition to collecting physician profiles, I also recorded each physician’s rank in search results under the platform’s default settings. Although about 38% of the physicians in the sample are female, only 30% of those displayed in the top 50 search results are women.
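The sample construction and the top-50 comparison can be sketched as follows. The article does not describe SRD's data fields, so the record layout and every name here (`physician_id`, `female`, `search_rank`, `hospital_tier`) are hypothetical; this is a minimal illustration of a left join on physician ID followed by a share computation, not the author's code.

```python
from typing import Dict, List, Optional

# Hypothetical record layout: field names are illustrative, not SRD's.
Record = Dict[str, object]


def merge_waves(wave_2020: Dict[str, Record], wave_2023: Dict[str, Record]) -> List[Record]:
    """Left-join 2023 supplementary fields onto the 2020 sample, keyed by physician ID.

    The 2020 wave defines the sample; physicians missing from 2023 keep only 2020 fields.
    """
    merged: List[Record] = []
    for physician_id, record in wave_2020.items():
        row: Record = {"physician_id": physician_id, **record}
        for key, value in wave_2023.get(physician_id, {}).items():
            row[f"{key}_2023"] = value  # suffix avoids overwriting the 2020 value
        merged.append(row)
    return merged


def female_share(rows: List[Record], top_k: Optional[int] = None) -> float:
    """Female share overall, or among the top_k by default search rank (1 = top result)."""
    if top_k is not None:
        rows = sorted(rows, key=lambda r: r["search_rank"])[:top_k]
    return sum(1 for r in rows if r["female"]) / len(rows)
```

On the full sample, `female_share(rows)` and `female_share(rows, top_k=50)` would correspond to the overall and top-50 shares described above.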

Female physicians receive fewer consultations despite setting lower prices

I first find that despite setting lower prices, female physicians on the SRD receive fewer consultations than their male counterparts. Specifically, within the same specialty, female physicians on average charge 8% less and provide 14.2% fewer services than male physicians. Even after accounting for factors such as education, professional title, work experience, availability, province, and the year they joined the platform, there remains a 2.3% gender price gap and an 11.0% gap in the number of monthly consultations provided (hereafter, the gender quantity gap). Together, these gaps mean that female physicians earn 13.0% less than male physicians each month on average. Put differently, the difference amounts to ¥83.6 per month, roughly the cost of four Big Mac meals.
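As a quick arithmetic check, the 2.3% price gap and the 11.0% quantity gap compound multiplicatively into the 13.0% earnings gap, assuming monthly earnings are price times quantity and reading both gaps as proportional differences. The paper estimates the 13.0% figure directly; this sketch only shows that the three numbers are consistent under that assumption.

```python
def combined_gap(price_gap: float, quantity_gap: float) -> float:
    """Proportional earnings gap implied by proportional price and quantity gaps,
    assuming earnings = price x quantity."""
    return 1.0 - (1.0 - price_gap) * (1.0 - quantity_gap)


conditional = combined_gap(0.023, 0.110)
print(f"{conditional:.1%}")  # 13.0%
```

Simply adding the two gaps (2.3% + 11.0% = 13.3%) slightly overstates the combined gap, because the price discount applies to an already smaller number of consultations.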

Next, I examine whether the gender gaps are attributable to patient discrimination against female physicians. One concern is that the gaps may instead stem from unobserved differences in physician quality. To address this, I construct a latent measure of quality by comparing physicians’ profiles from 2023 with those from 2020. The idea is that later changes in physicians’ profiles, such as moving from a lower-ranking hospital to a higher-ranking one between 2020 and 2023, reveal aspects of their quality that were not visible in 2020. After conditioning on this measure, both the gender price and quantity gaps remain at levels similar to those estimated without it. This provides supportive evidence of patient discrimination on the SRD.
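The article does not spell out how profile changes are coded, so the following is only a minimal sketch of the idea under stated assumptions: each profile carries hypothetical ordinal codes for hospital tier and professional title, and upward moves between the two waves become indicator variables that can enter the price and quantity regressions as extra controls.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Profile:
    # Hypothetical ordinal codes (not SRD's actual fields): higher values mean a
    # better-ranked hospital or a more senior professional title.
    hospital_tier: int
    title_level: int


def quality_signal(p2020: Profile, p2023: Profile) -> Tuple[int, int]:
    """Indicators for upward moves between the 2020 and 2023 profiles, used as a
    proxy for quality that was unobserved in 2020 (an assumed coding)."""
    moved_to_better_hospital = int(p2023.hospital_tier > p2020.hospital_tier)
    promoted = int(p2023.title_level > p2020.title_level)
    return moved_to_better_hospital, promoted
```

If adding such indicators leaves the female coefficient roughly unchanged, as the article reports for its own measure, the gaps are unlikely to be driven by this dimension of unobserved quality.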

Is the ranking algorithm sexist?

Given the presence of patient discrimination, I then explore the role of the platform, examining specifically whether it can intensify patient discrimination and widen gender disparities. Using the Gelbach decomposition (Gelbach 2016), which attributes the change in the gender coefficient to individual covariates without depending on the order in which correlated covariates are added, I find that a physician’s ranking is the primary contributor to the gender quantity gap.
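For readers unfamiliar with the method, here is a minimal NumPy sketch of the Gelbach decomposition. It rests on the OLS omitted-variable identity: the change in the female coefficient between a base and a full regression equals the sum, over each added covariate k, of (the coefficient on female when k is regressed on the base regressors) times (the coefficient on k in the full model). The function names are hypothetical, standard errors are omitted, and the paper's actual specification and controls are richer.

```python
import numpy as np


def ols(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Least-squares coefficients of y on the columns of X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def gelbach(y, female, base_controls, added_controls):
    """Gelbach (2016) decomposition of the change in the female coefficient.

    Base model: y ~ 1 + female + base_controls
    Full model: y ~ 1 + female + base_controls + added_controls
    Returns (beta_base, beta_full, contributions), where contributions[k] is the
    part of (beta_base - beta_full) attributable to added covariate k.
    """
    n = len(y)
    X1 = np.column_stack([np.ones(n), female, base_controls])
    X2 = np.column_stack([added_controls])  # ensures a 2-D (n, K) array
    beta_base = ols(X1, y)[1]
    full = ols(np.column_stack([X1, X2]), y)
    beta_full, beta2 = full[1], full[X1.shape[1]:]
    # gamma[k]: coefficient on female when added covariate k is regressed on X1
    gamma = np.array([ols(X1, X2[:, k])[1] for k in range(X2.shape[1])])
    return beta_base, beta_full, gamma * beta2
```

In the article's setting, `y` would be log monthly consultations and the added covariates would include search ranking; the contributions always sum exactly to the change in the female coefficient, which is what makes the attribution independent of the order in which covariates are added.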

Previous research highlights growing concerns about algorithmic fairness, especially when algorithms are trained on data generated by biased human decision-makers (Cowgill and Tucker 2020, Rambachan et al. 2020). Like other machine-learning algorithms, the ranking algorithm used by the SRD relies on a set of features to predict outcomes, aiming to minimise deviations between actual future patient demand and predicted patient behaviour. One key feature is the volume of past consultations. This means that the ranking algorithm, which is ostensibly free of gender bias, is exposed to patient discrimination through past patient demand. I find that female physicians are ranked lower in search results, and the gap is both statistically and economically significant; however, once past consultations are accounted for, the gender gap in ranking disappears. In addition, physicians’ rankings in 2020 are positively correlated with their rankings in 2023. Thus, the algorithm prioritises physicians who receive more patient inquiries per month, who are disproportionately male, and the persistence of rankings makes it hard for female physicians to improve their positions over time.
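The mechanism can be illustrated with synthetic data. In this sketch, which assumes a hypothetical linear scoring rule (SRD's actual algorithm is not described in the article), gender never enters the score, and patient discrimination lowers female physicians' past consultations by an assumed amount. The female coefficient on the score is negative unconditionally and vanishes once the score's own inputs are controlled for, mirroring the pattern reported above.

```python
import numpy as np


def simulate_physicians(n=5000, share_female=0.38, discrimination_shift=-0.3, seed=0):
    """Synthetic data: gender affects past demand (via patient discrimination) and nothing else."""
    rng = np.random.default_rng(seed)
    female = (rng.random(n) < share_female).astype(float)
    log_past_consults = 3.0 + discrimination_shift * female + rng.normal(0.0, 1.0, n)
    availability = rng.normal(0.0, 1.0, n)  # hypothetical non-gender feature
    return female, log_past_consults, availability


def ranking_score(log_past_consults, availability, w_past=1.0, w_avail=0.5):
    """Hypothetical gender-blind scoring rule: higher score = higher in search results."""
    return w_past * log_past_consults + w_avail * availability


def female_coef(y, female, *controls):
    """OLS coefficient on the female dummy, with optional controls."""
    X = np.column_stack([np.ones(len(y)), female, *controls])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1]
```

Here `female_coef(score, female)` is negative, while `female_coef(score, female, past, availability)` is zero up to floating-point error, because gender reaches the score only through past demand.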

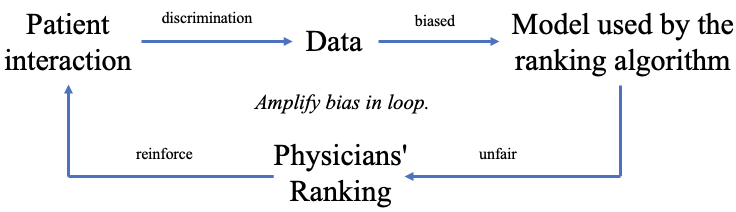

The findings discussed above are realised through the feedback loop illustrated in Figure 1. For example, when some patients discriminate against female physicians and intend not to buy their services, this would harm their rankings. The negative impact on female physicians’ rankings would then influence future patients’ decisions since it takes time and effort for patients to explore all the listed physicians. This, in turn, feeds back into the platform’s data used for future rankings, creating a vicious feedback loop. As a result, discrimination by some patients could lead to female physicians being ranked lower overall, exacerbating and perpetuating gender gaps.

Figure 1: Platform’s Ranking Algorithm: Feedback Loop
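The loop in Figure 1 can be illustrated with a deliberately simple simulation. Every modelling choice below is a hypothetical assumption for illustration, not SRD's algorithm or the paper's model: equal quality distributions for both genders, an initial ranking by quality, attention that decays as 1/(1 + rank), and a fixed share of patients who decline female physicians. The ranking itself never uses gender.

```python
import numpy as np


def simulate_feedback_loop(n_physicians=1000, share_female=0.38, discrimination=0.2,
                           periods=10, top_k=50, seed=0):
    """Toy model of the ranking feedback loop.

    Returns (female share in the final top_k, overall female share).
    """
    rng = np.random.default_rng(seed)
    female = rng.random(n_physicians) < share_female
    # Assumption: true quality has the same distribution for female and male physicians.
    quality = rng.lognormal(mean=0.0, sigma=0.5, size=n_physicians)
    # Assumption: with no history yet, the first ranking orders physicians by quality.
    past_consultations = quality.copy()
    # Assumption: a share `discrimination` of patients shown a female physician decline her.
    acceptance = np.where(female, 1.0 - discrimination, 1.0)
    for _ in range(periods):
        # Gender-blind ranking on past consultations only (rank 0 = top of the list).
        order = np.argsort(-past_consultations, kind="stable")
        rank = np.empty(n_physicians, dtype=int)
        rank[order] = np.arange(n_physicians)
        # Assumption: patient attention decays with list position.
        exposure = 1.0 / (1.0 + rank)
        # This period's demand becomes the data behind next period's ranking.
        past_consultations = quality * exposure * acceptance
    final_order = np.argsort(-past_consultations, kind="stable")
    return float(female[final_order[:top_k]].mean()), float(female.mean())
```

Under these assumptions, comparing `discrimination=0.0` with a positive value on the same seed shows the female share of the top 50 can only fall: no female physician is ever ranked above a higher-quality colleague, some are pushed out of the top positions, and the attention penalty then keeps them out.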

Policy implications: Breaking the loop

My research provides evidence that the gig economy is not a panacea for gender equality. While it offers flexibility, it also reflects the biases of broader society, which are magnified by the algorithms that power it. This highlights the importance of improving and regulating the algorithmic designs of burgeoning online platforms, with a specific focus on ensuring algorithmic fairness. Effective measures, such as debiasing data and models, anonymising gender, and conducting regular audits, are essential to break the self-reinforcing feedback loop. Addressing these issues is crucial for realising the gig economy's potential to advance gender equality.

References

Chen, Y (2024), "Does the gig economy discriminate against women? Evidence from physicians in China", Journal of Development Economics, 169: 103275.

Cook, C, R Diamond, J V Hall, J A List, and P Oyer (2021), "The gender earnings gap in the gig economy: Evidence from over a million rideshare drivers", The Review of Economic Studies, 88(5): 2210-2238.

Cowgill, B and C E Tucker (2020), "Algorithmic fairness and economics", Columbia Business School Research Paper.

Foong, E, N Vincent, B Hecht, and E M Gerber (2018), "Women (still) ask for less: Gender differences in hourly rate in an online labor marketplace", Proceedings of the ACM on Human-Computer Interaction, 2(CSCW): 1-21.

Gelbach, J B (2016), "When do covariates matter? And which ones, and how much?", Journal of Labor Economics, 34(2): 509-543.

Heath, R et al. (2024), "Female Labour Force Participation", VoxDevLit.

International Labour Organization (2018), "Global Wage Report 2018/19: What lies behind gender pay gaps", Geneva: International Labour Organization.

Litman, L, J Robinson, Z Rosen, C Rosenzweig, J Waxman, and L M Bates (2020), "The persistence of pay inequality: The gender pay gap in an anonymous online labor market", PLoS ONE, 15(2): e0229383.

Rambachan, A, J Kleinberg, J Ludwig, and S Mullainathan (2020), "An economic perspective on algorithmic fairness", AEA Papers and Proceedings, 110: 91-95.