Self-interviewing survey techniques substantially increase reported levels of intimate partner violence, but this may be driven by respondents’ misunderstanding

Intimate partner violence (IPV) is a pressing global public health problem, but measuring its true prevalence is challenging. IPV is often measured in surveys where a human enumerator asks whether a specific violent incident happened in a given time period and the respondent affirms or denies it. The latest estimates from these surveys show that globally more than 1 in 4 women have experienced physical or sexual IPV during their lifetime (Sardinha et al. 2022).

Public health professionals are concerned that the actual prevalence of IPV could be higher, as women might downplay their experiences of IPV even when responding to surveys. Yet it is unclear whether this is the case. On the one hand, professionally conducted surveys are designed to ensure confidentiality, and women may be willing to report IPV to a surveyor they will not interact with again. Surveys also relieve women of the burden of initiating an uncomfortable conversation themselves. On the other hand, some stigma may still apply: a survivor may feel ashamed, be reluctant to confide in others, or fear being overheard, all of which can undermine her willingness to disclose her experiences of IPV.

An alternative measurement tool: ACASI

An alternative approach is self-interviewing, where women self-administer IPV questions privately with their responses shielded from the human enumerator. In our study (Park et al. 2023), we evaluate one such interviewing tool known as Audio Computer-Assisted Self-Interviewing (ACASI).

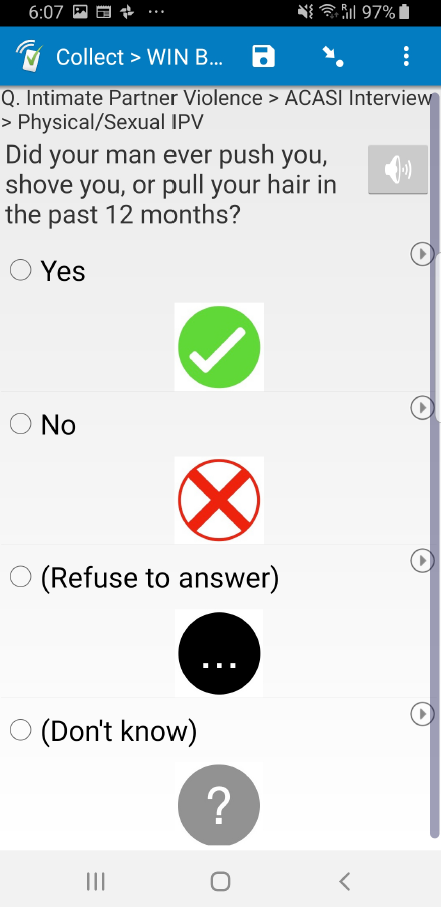

In ACASI, respondents use headphones to listen to pre-recorded questions and respond using a touchscreen device (an Android tablet in our setting). Figure 1 displays the tablet interface, featuring a speaker icon the respondent can tap to listen to the question and four images from which the respondent selects her answer by touching the screen directly. The enumerator has minimal interaction with the participant, except for offering an initial explanation of the module and being available for clarification in case the participant seeks assistance.

Figure 1: Self-Interviewing Module

How could ACASI affect IPV reporting?

Self-interviewing, and ACASI in particular, can affect IPV reporting in several ways relative to conventional face-to-face interviewing. First, ACASI provides privacy and confidentiality, which could mitigate barriers such as social taboos, emotional pain, fear of retribution, or feelings of shame or embarrassment. This channel would increase IPV reporting. Second, self-interviewing removes the human element (for example, an empathetic enumerator who builds rapport with the respondent over the course of the survey) that might otherwise make respondents more comfortable disclosing sensitive information. If this channel is important, respondents could be less likely to report IPV via ACASI.

A third factor, which is the main focus of our paper, is that ACASI requires the respondent to understand the questions on her own and to operate the tablet, which may not be straightforward in a context where digital literacy is low. In almost every setting, such miscomprehension would increase measured IPV. This is because IPV is measured through a module of yes/no questions, which are then grouped into four categories: controlling behaviour, emotional IPV, physical IPV, and sexual IPV (WHO 2005). If the respondent does not fully understand a question or makes a mistake using the tablet (e.g. tapping yes instead of no), this introduces random noise that pulls the share of yes answers towards 50%. Typically, the proportion of respondents answering yes to each individual question is well below 50% (in our study sample, 7% on average in Malawi and 14% in Liberia). Random errors therefore turn more true no answers into yes than the reverse, and so tend to increase measured prevalence.
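As a minimal sketch of this mechanism, assume each answer is independently recorded wrongly (yes flipped to no, or no to yes) with a hypothetical error rate e. If the true share of yes answers is p, the expected measured share is p(1 − e) + (1 − p)e = p + e(1 − 2p), which exceeds p whenever p < 0.5 and e > 0. The Python below computes this and checks it with a small simulation; the function names and the 10% error rate are illustrative assumptions, not quantities from the study.

```python
import random

def measured_share(p, e):
    """Expected share of recorded 'yes' answers when the true share is p and
    each answer is flipped (yes <-> no) independently with probability e.
    The flip model is an illustrative assumption."""
    return p * (1 - e) + (1 - p) * e

def simulate_share(p, e, n, seed=0):
    """Monte Carlo check of measured_share using n simulated respondents."""
    rng = random.Random(seed)
    yes = 0
    for _ in range(n):
        truth = rng.random() < p    # true answer is yes with probability p
        mistake = rng.random() < e  # mistaken tap with probability e
        yes += truth != mistake     # recorded yes if exactly one of the two holds
    return yes / n

# Average per-question 'yes' shares reported for the study samples;
# e = 0.10 is a hypothetical error rate, not an estimate from the study.
for country, p in [("Malawi", 0.07), ("Liberia", 0.14)]:
    print(country, p,
          round(measured_share(p, 0.10), 3),
          round(simulate_share(p, 0.10, 100_000), 3))
```

Under these assumptions, a 10% error rate would raise a true 7% share to about 15.6%, and a true 14% share to about 21.2%.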

Measurement experiment: self-interviewing vs face-to-face interviewing

To study these channels, we ran a measurement experiment within surveys conducted as part of an evaluation of an unconditional cash transfer programme in rural Malawi and Liberia (Aggarwal et al. 2023). Female respondents were individually randomised to answer the IPV module either via self-interviewing (SI) or via face-to-face interviewing (FTFI). We used the standard questionnaire from the WHO’s Violence Against Women module and focus on questions about the 12 months preceding the survey. For each category, we construct a summary index: a binary variable equal to 1 if the respondent reports experiencing any of the acts in that category.
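An any-of index of this kind also compounds question-level mistakes. As an illustration, assume each of a category’s k items is mis-tapped independently with the same hypothetical probability e; then a respondent whose true answers are all no is coded 1 with probability 1 − (1 − e)^k. The sketch below (hypothetical function names, independence assumed) builds the index for one respondent and evaluates that probability for a few values of k.

```python
def any_index(answers):
    """Category summary index: 1 if any item in the category was answered yes (1), else 0."""
    return int(any(answers))

def spurious_flag_rate(k, e):
    """Probability that a respondent whose true answers are all 'no' is coded 1
    on the any-index, assuming each of k items is independently mis-tapped
    with probability e (an illustrative assumption)."""
    return 1 - (1 - e) ** k

# Hypothetical answers to a five-item category (1 = yes, 0 = no)
print(any_index([0, 0, 1, 0, 0]))  # → 1
print(any_index([0, 0, 0, 0, 0]))  # → 0

# Hypothetical 3% per-item error rate; k is the number of items in the category
for k in (1, 5, 10):
    print(k, round(spurious_flag_rate(k, 0.03), 3))
```

Under these assumptions, with a 3% per-item error rate and 10 items, about 26% of respondents with no true experiences would be coded as having experienced violence in that category.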

IPV reports in self-administered surveys are higher

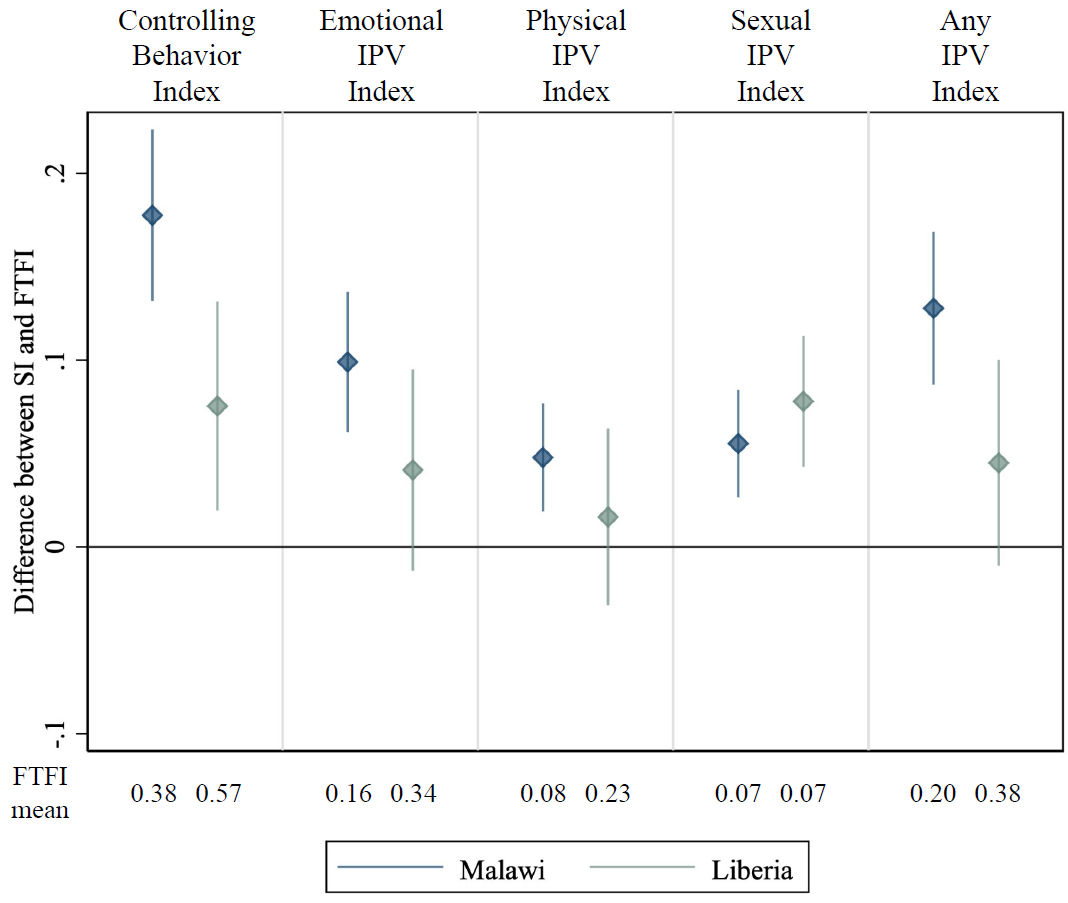

Figure 2 shows the results. For all categories, SI increases measured IPV. For example, the “any” IPV index (whether the respondent has experienced any emotional, physical, or sexual IPV in the past year) is 20% under FTFI vs. 33% under SI in Malawi, and 38% under FTFI vs. 42% under SI in Liberia. The difference is statistically significant in Malawi but not in Liberia.

Figure 2: Effect of Self-interviewing on IPV Indices

Note: The diamond bullet indicates the point estimate of the difference between SI and FTFI, and the vertical line is the 95% confidence interval.
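For readers who want to reproduce a comparison like the one in Figure 2, here is a minimal sketch of an unadjusted difference in proportions with a normal-approximation (Wald) 95% confidence interval. It uses the Malawi “any” IPV shares reported above, but the arm sizes of 500 are hypothetical, and the study’s own estimates may rely on regressions with controls or different standard errors; this is not the authors’ estimator.

```python
import math

def diff_in_proportions_ci(p_si, n_si, p_ftfi, n_ftfi, z=1.96):
    """Unadjusted SI-minus-FTFI difference in proportions with a
    normal-approximation (Wald) confidence interval (95% for z = 1.96)."""
    diff = p_si - p_ftfi
    se = math.sqrt(p_si * (1 - p_si) / n_si + p_ftfi * (1 - p_ftfi) / n_ftfi)
    return diff, diff - z * se, diff + z * se

# Malawi "any" IPV shares from the text; the arm sizes (500 each) are hypothetical
diff, lower, upper = diff_in_proportions_ci(0.33, 500, 0.20, 500)
print(f"difference = {diff:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})")
```

An interval that excludes zero corresponds to a difference that is statistically significant at the 5% level.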

Why is reported prevalence higher?

Respondents are thus more likely to report IPV incidents when asked via SI. While this is consistent with SI destigmatising IPV reporting, we also find evidence that respondents were making mistakes when using ACASI.

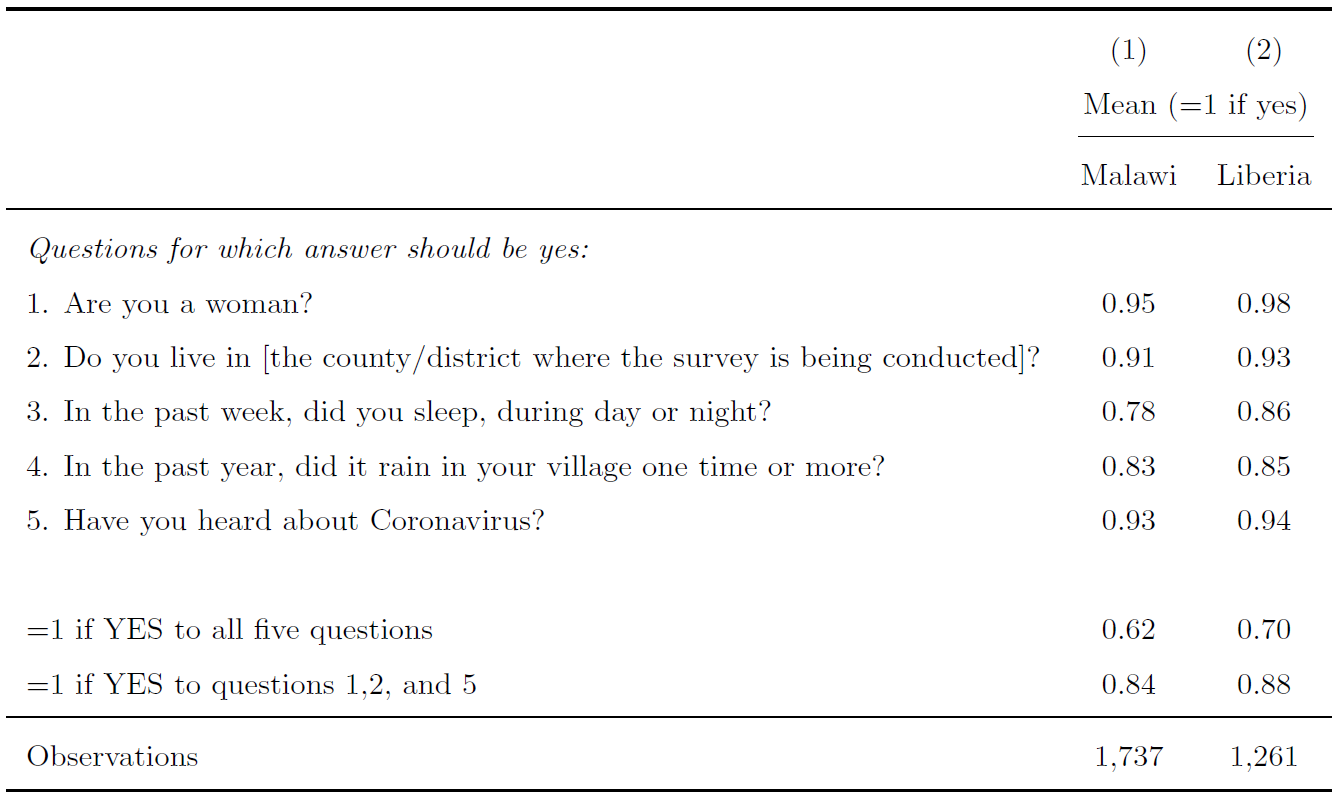

Before randomising respondents into SI or FTFI, we administered a set of questions via SI to all respondents to check whether they were making mistakes. Although these questions were deliberately chosen so that the correct answer is yes, Table 1 shows that a non-negligible proportion of participants tapped no on the tablet.

Table 1: Self-interviewing Comprehension Questions

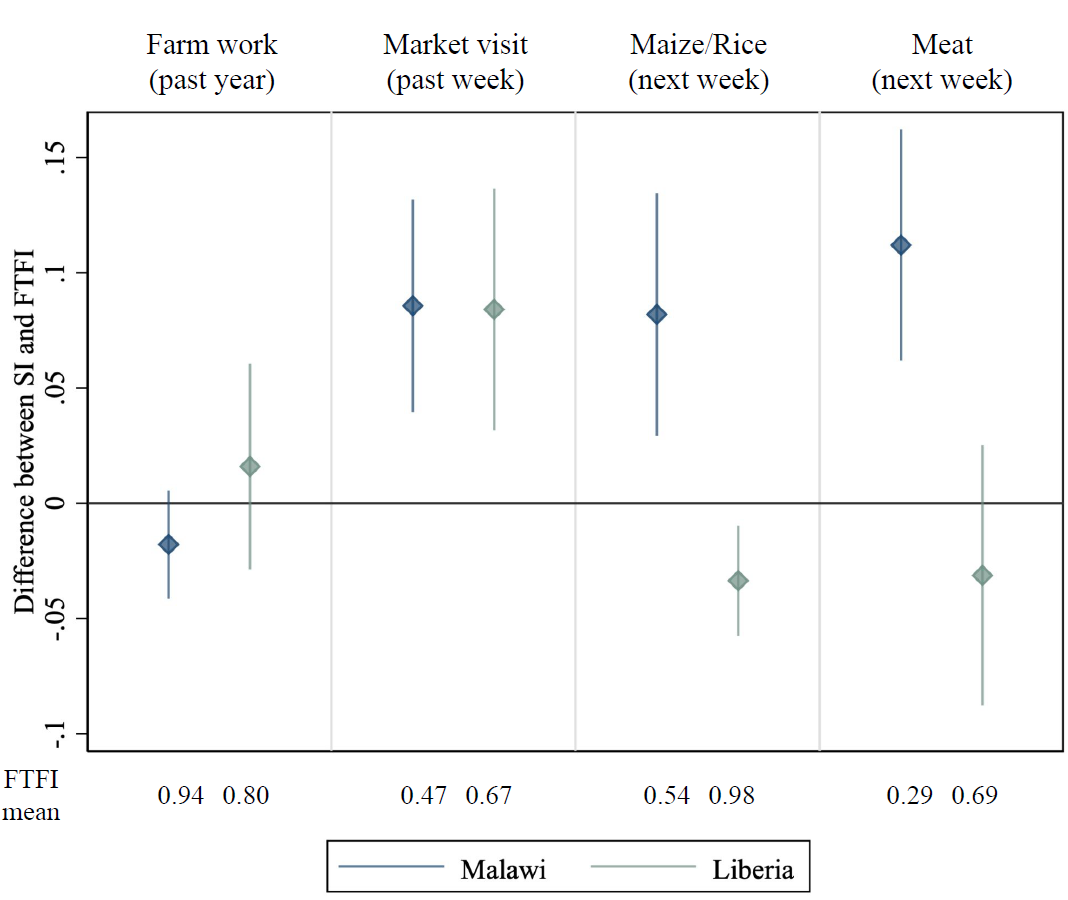

Furthermore, after randomisation, we asked a set of innocuous placebo questions in each survey modality. Because respondents were randomly assigned and these questions carry no stigma, answers should not differ systematically between SI and FTFI. Yet Figure 3 shows sizeable, statistically significant differences between SI and FTFI for 3 of 4 placebo questions in Malawi and for 2 of 4 in Liberia. The presence of such placebo effects suggests that ACASI may not be reliable in this setting.

Figure 3: Placebo Effects of Self-interviewing

Note: The diamond bullet indicates the point estimate of the difference between SI and FTFI, and the vertical line is the 95% confidence interval.

Overall, then, we find evidence that women make mistakes when answering via SI and that SI shifts responses even to innocuous placebo questions. In fact, the placebo effects are in many cases similar in magnitude to our main treatment effects. We therefore conclude that SI is unreliable in this setting, and we cannot rule out that the IPV effects are also driven by a similar bias.

Policy implications

Our paper tests the efficacy of SI in eliciting truthful responses about IPV experiences among women in rural Malawi and Liberia. While we find that respondents are more likely to report IPV experiences via SI than via FTFI, we also find evidence that they were making mistakes when using the SI tool. In a context where the true prevalence is well below 50% (as it is for IPV in many settings, including ours), random noise caused by incomplete comprehension of the survey modality would likely induce an upward bias.

This is deeply concerning because this bias runs in the same direction as the destigmatisation channel, so what looks like reduced stigma could be entirely an artefact of measurement error. In sum, non-conventional methods for collecting data on stigmatised behaviours should be implemented with caution, as they may open up unexpected channels of bias. It is also advisable to accompany new methods with extensive testing and other forms of ground-truthing before widespread implementation.

References

Aggarwal, S, J Aker, D Jeong, N Kumar, D S Park, J Robinson, and A Spearot (2023), “The Dynamic Effects of Cash Transfers: Evidence from Rural Liberia and Malawi”, unpublished manuscript.

Park, D S, S Aggarwal, D Jeong, N Kumar, J Robinson, and A Spearot (2023), “Private but Misunderstood? Evidence on Measuring Intimate Partner Violence via Self-Interviewing in Rural Liberia and Malawi”, Working Paper.

Sardinha, L, M Maheu-Giroux, H Stöckl, S R Meyer, and C García-Moreno (2022), “Global, regional, and national prevalence estimates of physical or sexual, or both, intimate partner violence against women in 2018”, The Lancet 399(10327): 803–813.

WHO (2005), “WHO Multi-country Study on Women’s Health and Domestic Violence against Women”, World Health Organization.